logback 日志异步化输出对性能的影响

场景

Java在每次请求结束后都会输出日志记录每次请求的相关信息,一个QPS对应一次日志的输出。

异步化基本百利而无一害,特定的场景、机器下可以数倍提升效率

结论

- 异步化对性能的影响取决于日志的多少和机器CPU的核数

- logback日志异步化主要是写日志逻辑变成了单线程,没有锁

- 异步化后性能有10-15%的提升(Profiling看到日志相关的CPU占比从13%降到6.5%)

- 异步输出条件下,日志多少对性能的影响有,但是不明显(15%以内)

- 如果是同步输出日志,开启延迟flush log(

false //flush policy)能有5%的性能提升 - 异步化后再开启延迟flush log对性能提升不明显(Profiling看到log flush的CPU从1.2%降到0.4%)

- slf4j只是个接口框架,JUL/log4j2和logback是具体实现,logback是log4j的升级版

- 如果一秒钟日志输出达到6M(主要取决于条数),那么异步化能提升一倍的性能(日志太多的时候同步下CPU跑不满)

- 同步日志输出场景下瓶颈主要在同步锁而不是磁盘写日志(顺序写磁盘)

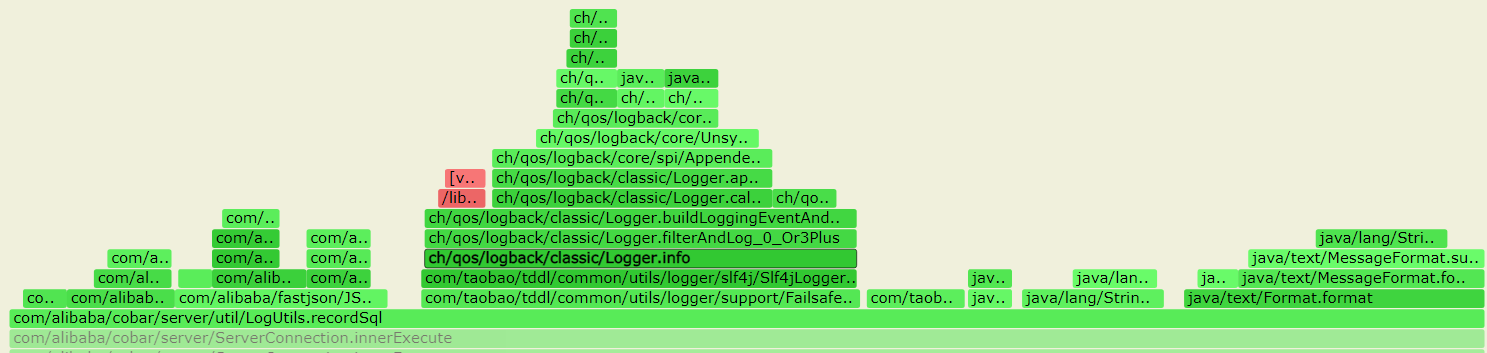

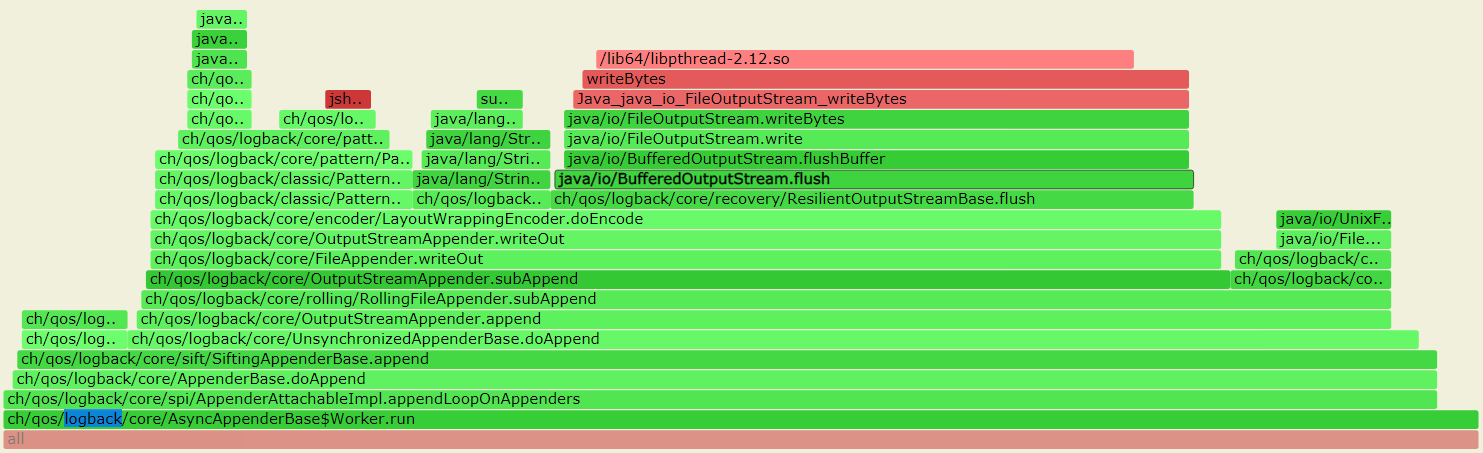

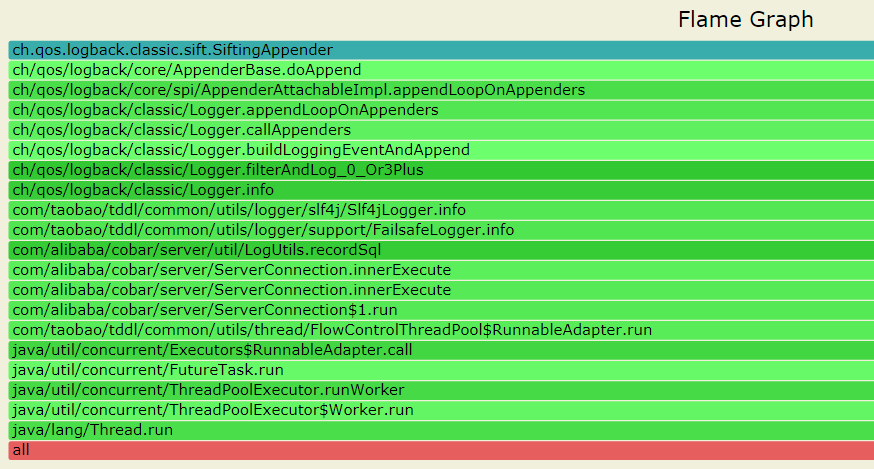

- 从Profiler堆栈来看异步后锁和日志输出部分占比明显降低

- CPU核数越多意味着并发越多,那么同步异步和immediateFlush的影响越明显

- 异步化输出日志后对avg rt 和 rt 95%线下降影响非常明显,也更稳定

- immediateFlush 对同步影响比较明显(一倍),主要是因为每次刷盘慢导致别的线程等锁时间长,在异步场景下基本不明显

- immediateFlush为false有丢日志的风险,异步后没有必要再设immediateFlush为false

- 延迟Flush的cache取决于JDK的BufferedOutputStream缓冲大小,默认8K,不可更改

- 异步后日志输出的瓶颈在于单核能力,Intel(R) Xeon(R) Platinum 8163 CPU @ 2.50GHz 输出能力大概是每秒20万条日志

测试数据

4核的机器下性能提升没这么明显,因为锁争抢没这么激烈

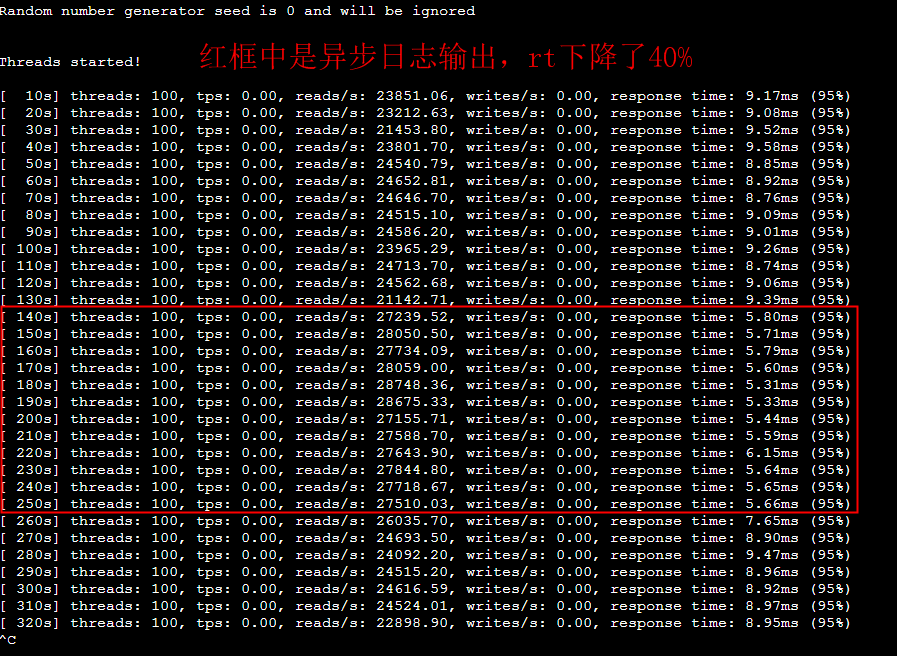

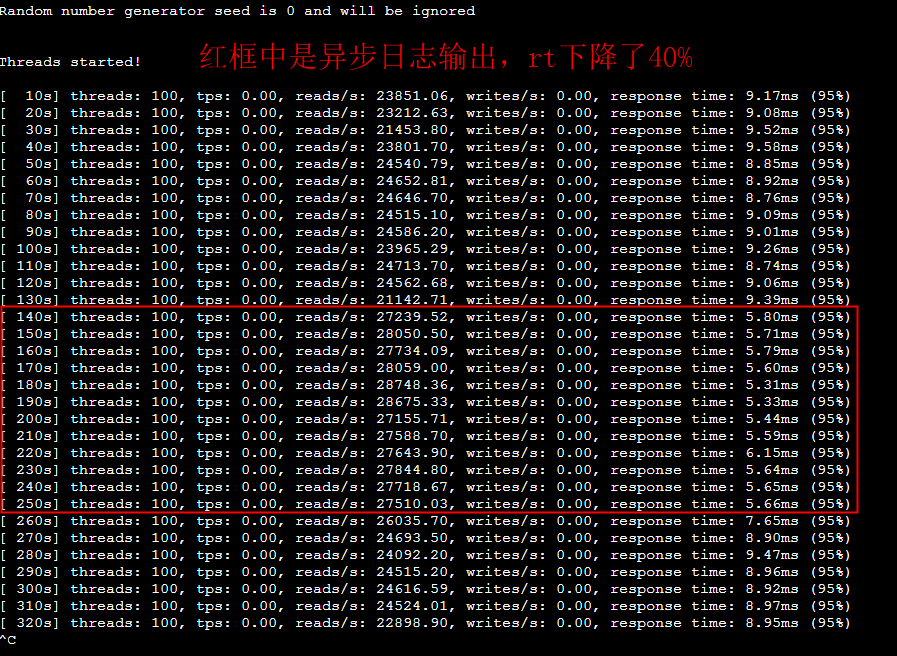

4.9内核下, 异步对rt影响明显, 似乎是4.9对锁的处理更好:

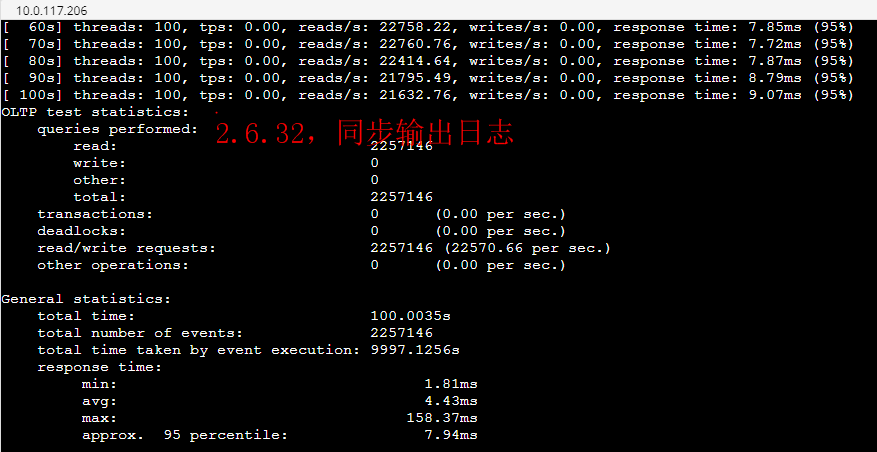

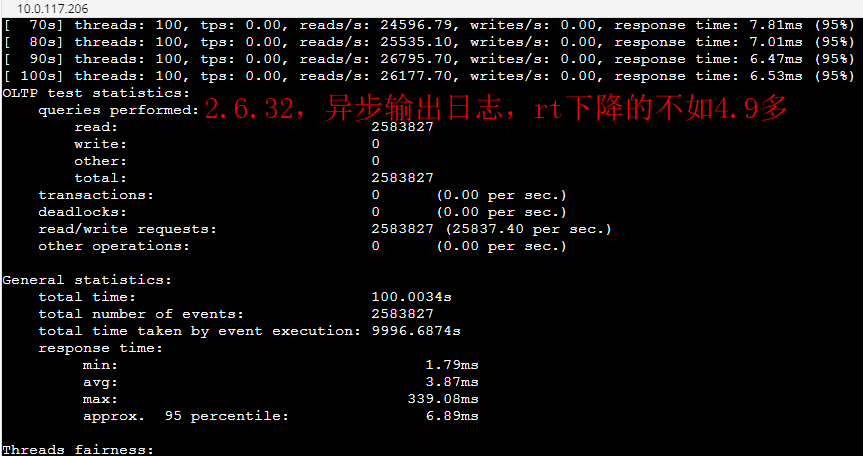

2.6.32下异步对rt影响不是很大

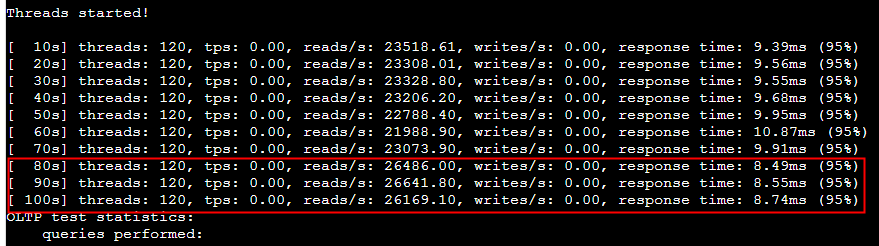

加大120线程并发,可以看到tps提升明显但是rt仍然不明显

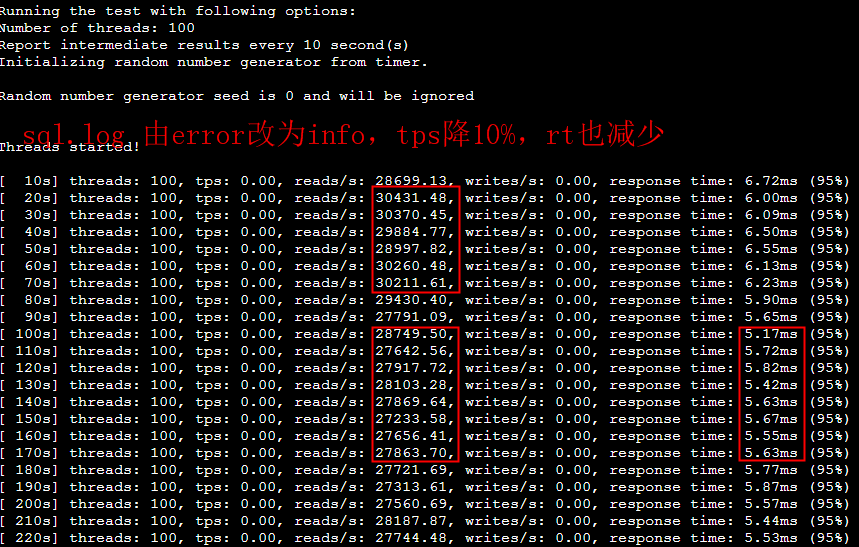

如果将 sql.log 改为error级别,tps上升到30000,rt比info也有将近10%的提升,这个rt的提升是因为tps提升导致的。(都是异步输出的场景下)

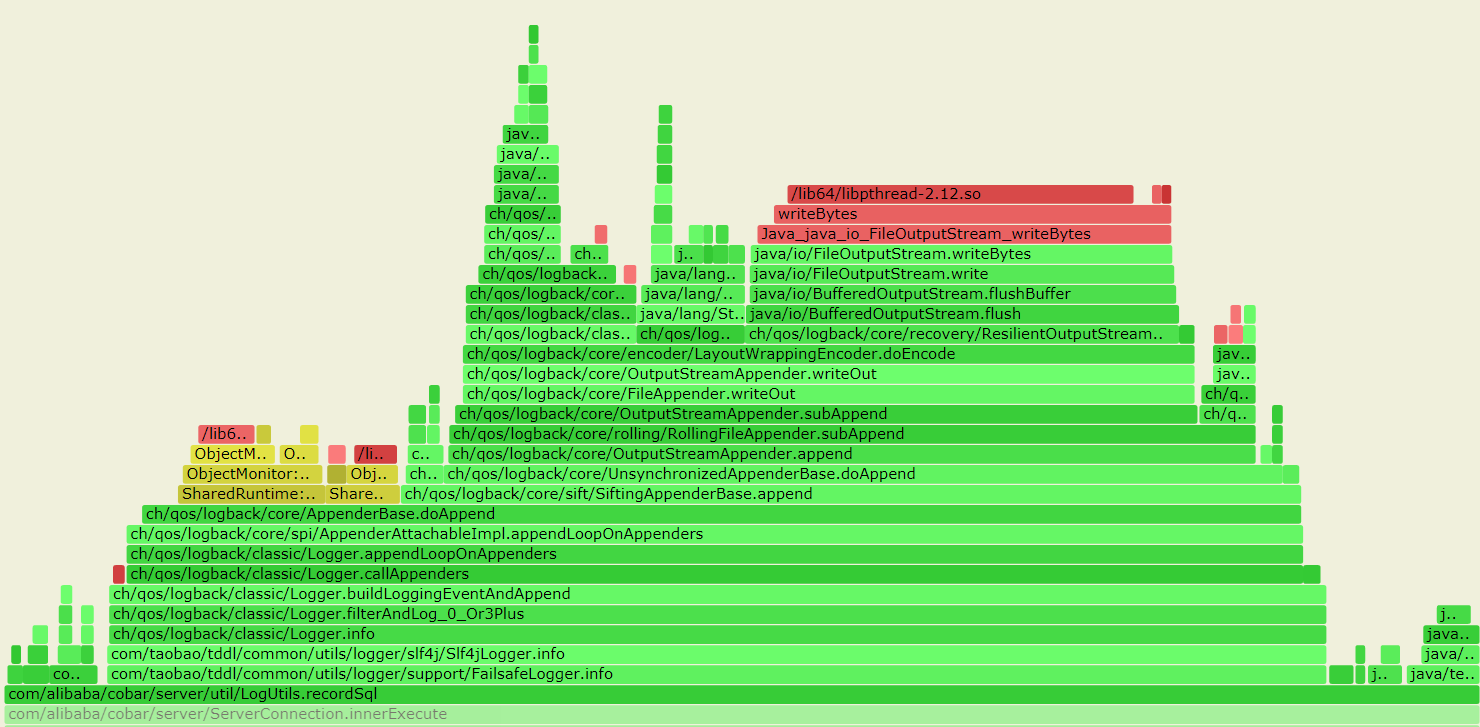

同步情况下的profiler

recordSQL: 12.9%

logback.doAppend: 10%

异步情况下的profiler:

recordSQL: 3.7%

logback.doAppend: 2.63%

在16个core的机器上锁争抢更明显

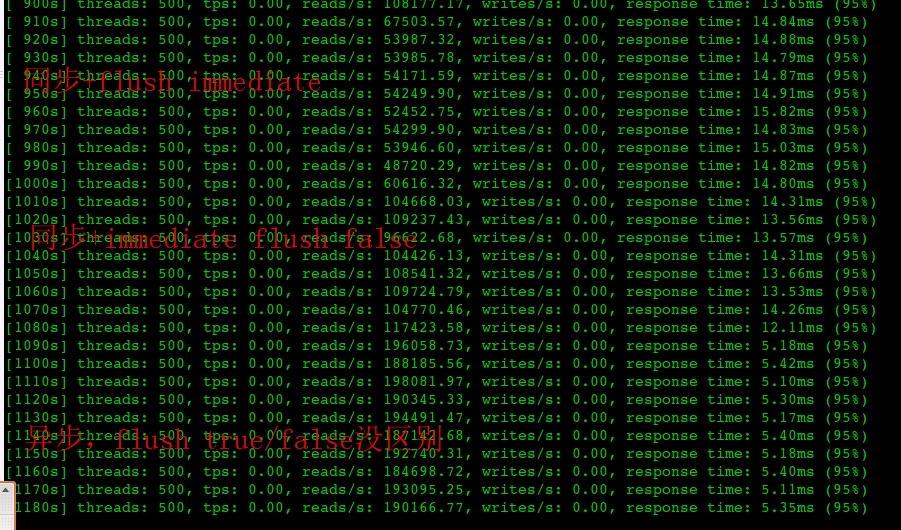

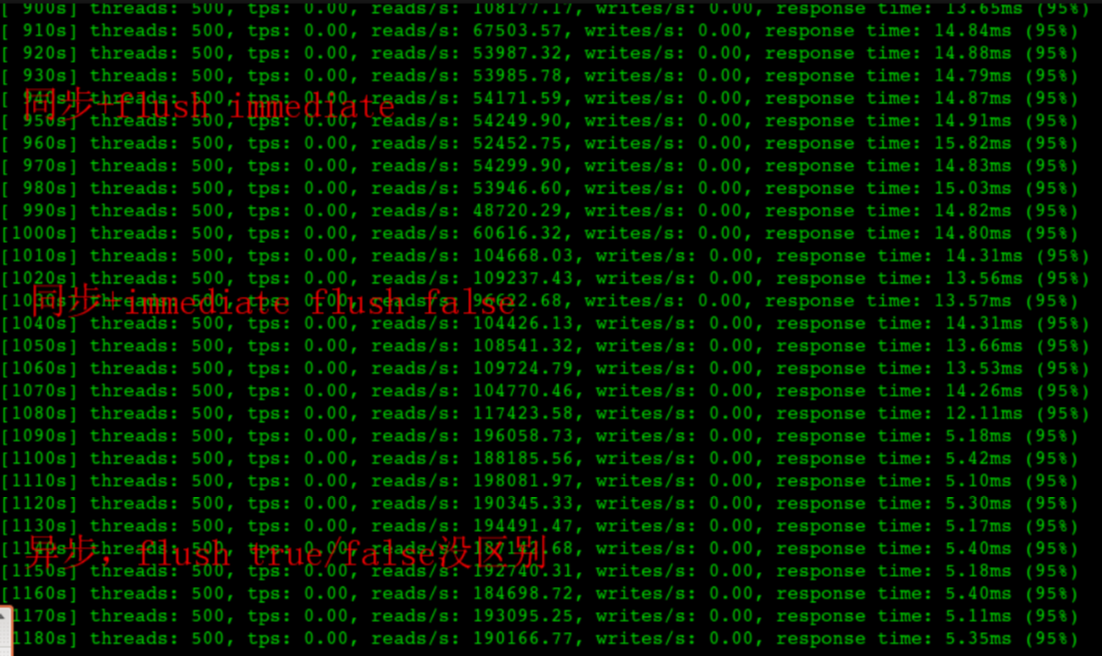

同步和异步以及immediateFlush的影响

16core的机器

结论:同步输出的情况下immediateFlush 为false性能有一倍的提升(但是异常退出的情况下有丢日志风险)

异步输出是同步的4倍(这个差异依赖于cpu核数、业务逻辑的特点等),在异步的情况下immediateFlush无所谓,所以王者还是异步输出,同时异步输出对rt 95%线下降非常明显

一个业务逻辑稍微慢点的场景

异步输出日志点查场景tps11万+,同步输出日志后点查tps4万+,同时jstack堆栈也能看到333个BLOCKED堆栈:

1 | #[ 210s] threads: 400, tps: 0.00, reads/s: 115845.43, writes/s: 0.00, response time: 7.57ms (95%) |

immediateFlush true/false 以及同步异步对tps的影响

结论:同步输出的情况下immediateFlush 为false性能有一倍的提升(但是异常退出的情况下有丢日志风险)异步输出是同步的4倍(这个差异依赖于cpu核数、业务逻辑的特点等),在异步的情况下immediateFlush无所谓,所以王者还是异步输出,同时异步输出对rt 95%线下降非常明显

异步配置

<appender name="asyncROOT" class="ch.qos.logback.classic.AsyncAppender">

<queueSize>1000</queueSize>

<maxFlushTime>3000</maxFlushTime>

<discardingThreshold>0</discardingThreshold>

<neverBlock>true</neverBlock>

<appender-ref ref="ROOT"/>

</appender>

JDK中BufferedOutputStream Buffer大小

/**

* Creates a new buffered output stream to write data to the

* specified underlying output stream.

*

* @param out the underlying output stream.

*/

public BufferedOutputStream(OutputStream out) {

this(out, 8192);

}

尝试改大buffer基本没什么明显的影响

测试环境2个节点的DRDS-Server,每个节点4Core8G(机型sn1)

| tps | 100秒每个节点输出日志大小 | |

|---|---|---|

| 不输出日志 | 35097 | |

| sql.log+同步 | 28891 | 292M |

| sql.log+异步 | 32164 | 292M |

| sql.log+com.taobao/trace+异步 | 28894 | 670M |

| sql.log+com.taobao/trace+同步 | 13248 |

com.taobao/trace 指的是将com.taobao.*设为trace输出,以增加输出日志量。

是否开启immediateFlush(默认true)

| tps | 100秒每个节点输出日志大小 | |

|---|---|---|

| 同步+immediateFlush | 27610 | 282M |

| 同步 | 29554 | 303M |

| 异步+immediateFlush | 31100 | 245M |

| 异步 | 31150 | 260M |

(这个表格和前面的表格整体tps不一致,前一个表格是晚上测试,这个表格是上午测试的,不清楚是否环境受到了影响)

总结

关键结论见最前面,但是要结合自己场景输出日志的速度,日志输出越少影响越不明显,机器核数越多会越明显,总的原因就是logback的 AppenderBase的doAppend()函数需要同步

public synchronized void doAppend(E eventObject)

案例

Logback 异步日志处理能力分析

环境信息

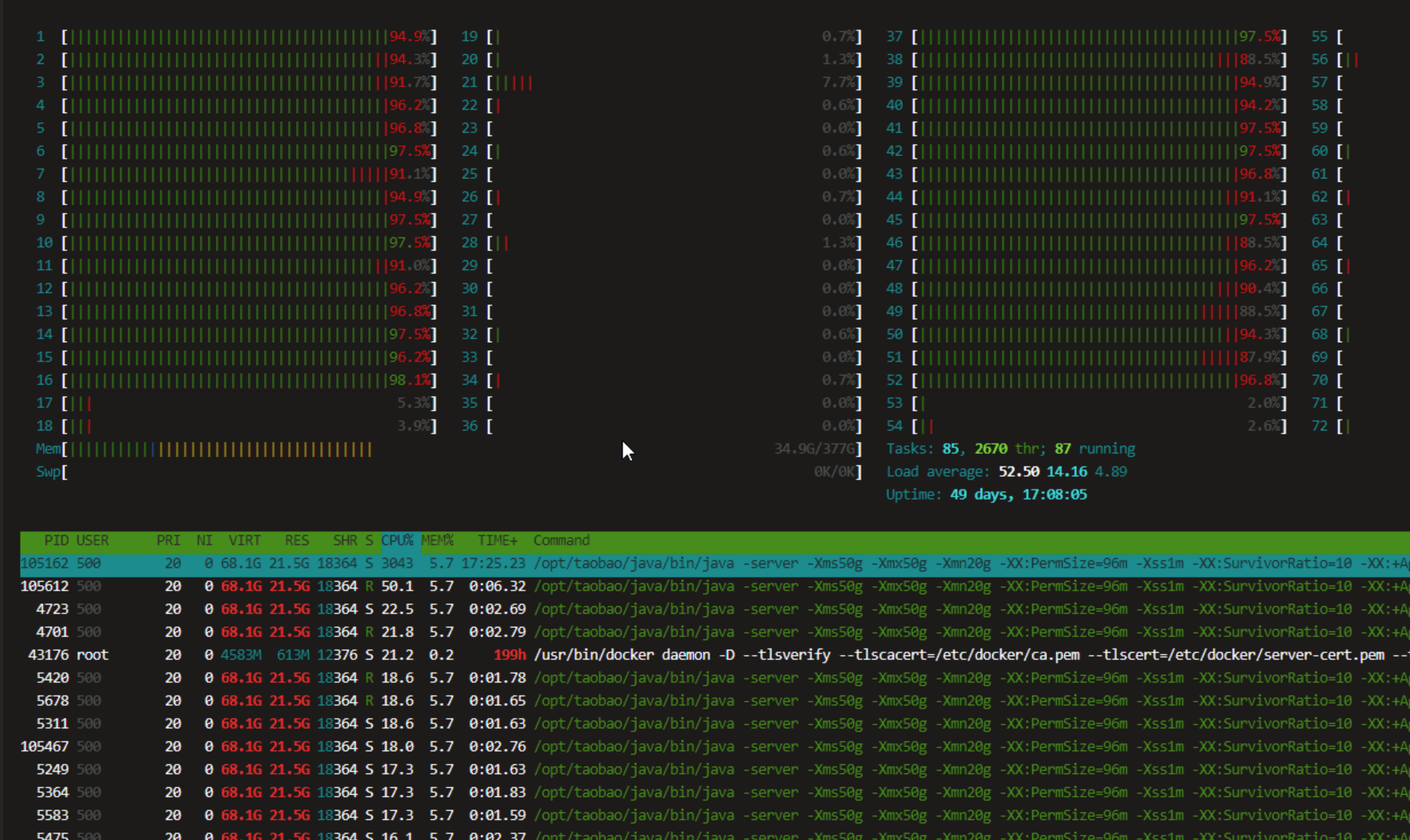

32C128G

单条日志较大 len=1137

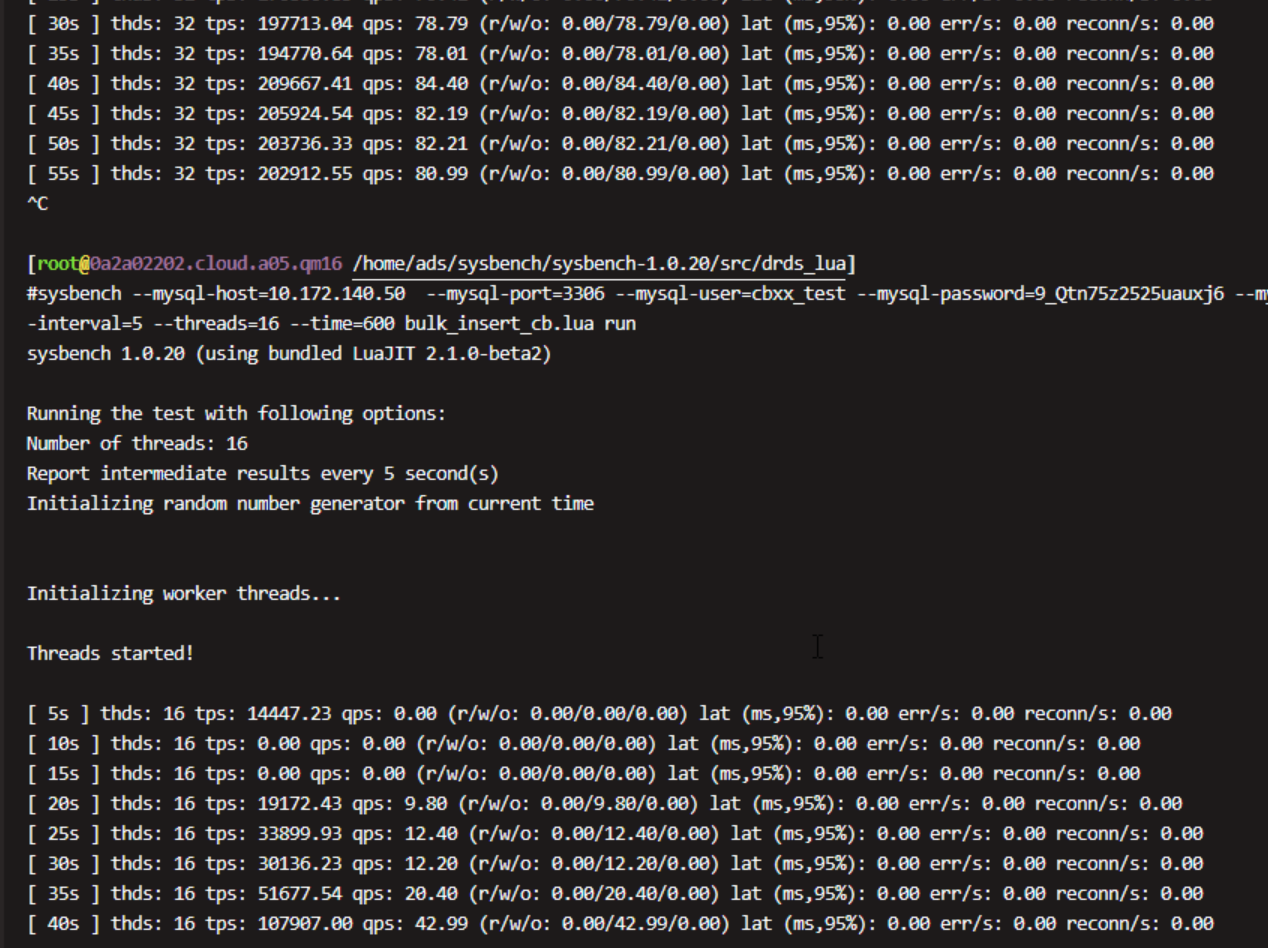

top

1 | PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND |

日志内容

1 | [len=1137] select 1 /*skkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkk*/ |

平均每分钟输出日志内容 13个512MB日志文件,每秒100MB的输出能力

每条日志len=150

1 | [len=150] SELECT 1 /*skkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkkk*/ |

减小日志

1 | [len=46] SELECT 1 /*skkkkkkkkkkkkkkkkkkkkkkkkkkkkkkk*/ |

select 1日志

1 | [len=8] SELECT 1 |

点查日志

1 | [len=43] SELECT *, pad FROM sbtest1 WHERE id=5000089 |

后端RDS快打满了

案例总结

len表示日志长度

| len=43 ,点查 | len=8 select 1 | [len=150] SELECT 1 | [len=1137] select 1 | |

|---|---|---|---|---|

| QPS | 12万(rds瓶颈) | 18.6万(写日志单线程瓶颈) | 18.5万 | 8.5万(磁盘瓶颈) |

因为调度问题 异步写日志进程很难跑满CPU, 最多跑到80%左右

单核按条输出能力在 18万条每秒左右(Intel(R) Xeon(R) Platinum 8269CY CPU @ 2.50GHz)

如果日志太大会受限于物理磁盘写出能力,测试环境最大输出能力是每秒 100MB

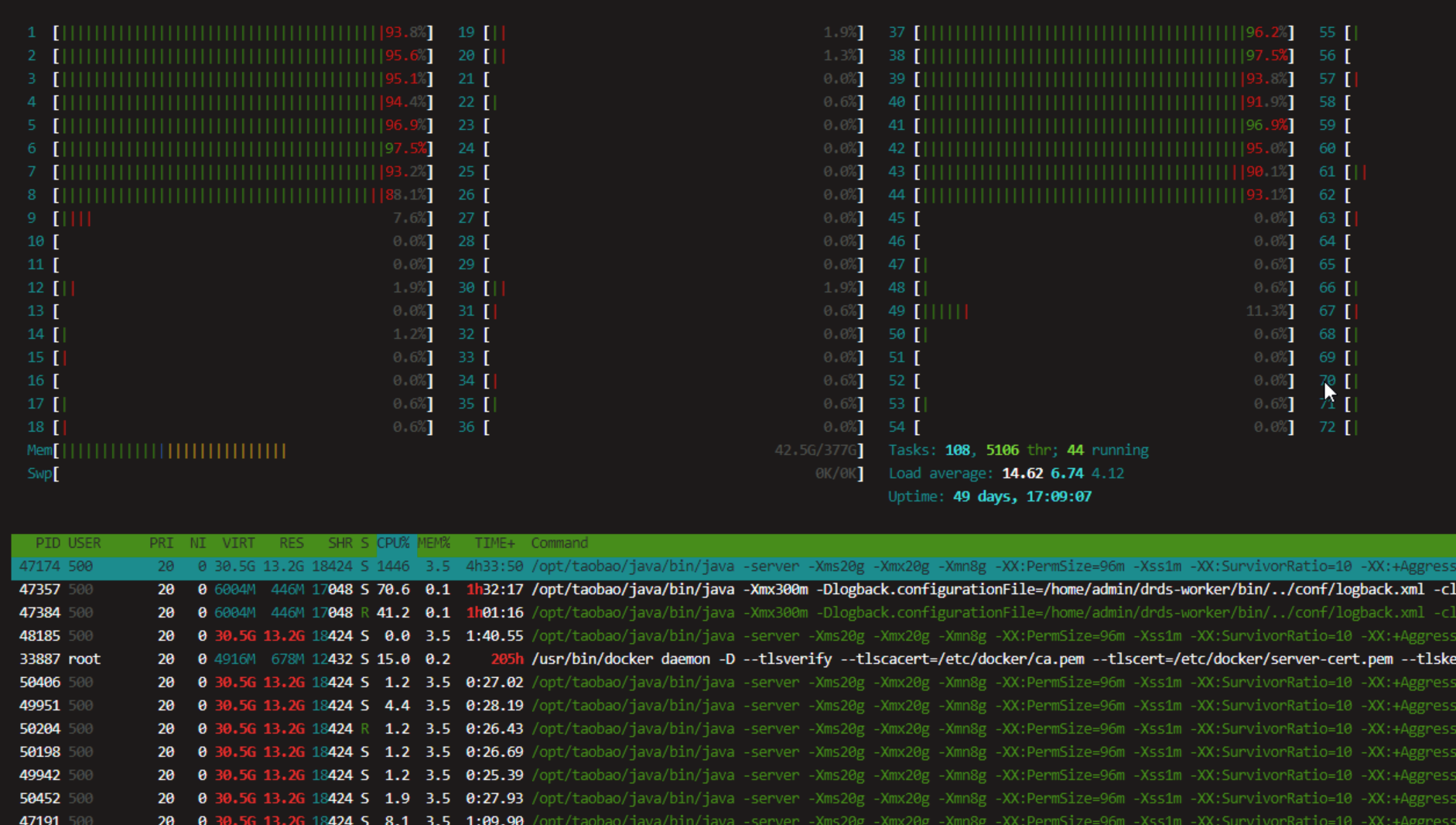

16core VS 32 Core 案例比较

如果都是用一半超线程,32 Core基本能达到16Core的1.9倍性能

下图上半部分是32C,下半部分是16C

对应CPU状态

如果都改用物理核后,32Core VS 16Core是 25万 VS 15万,大概提升有1.67倍,不到2倍

同时看到perf 也是打了7折,这应该是core太多后应用代码内部锁竞争太激励

横向比较

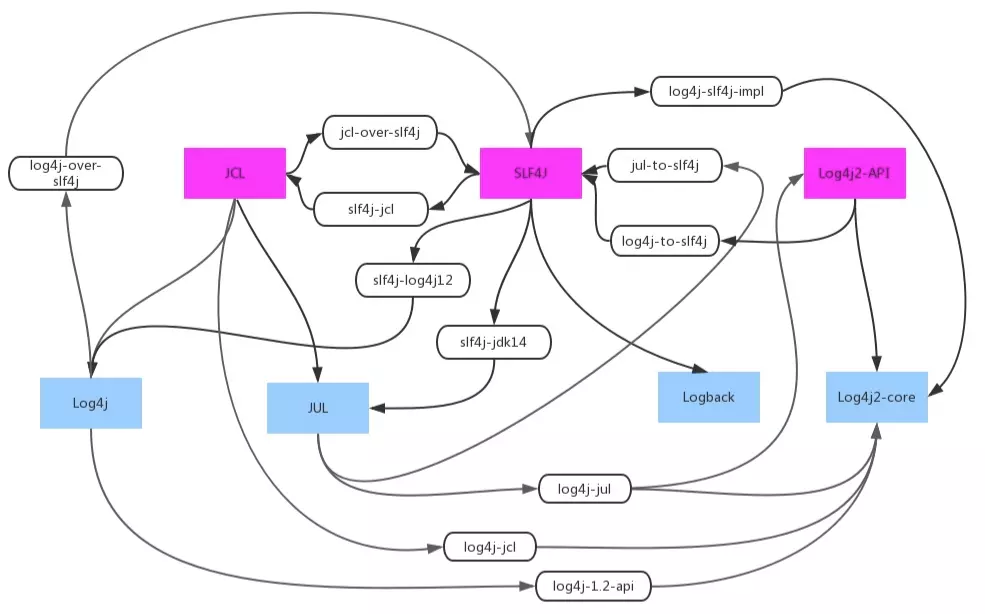

logback、log4j2等横向关系和性能比较分析

日志框架

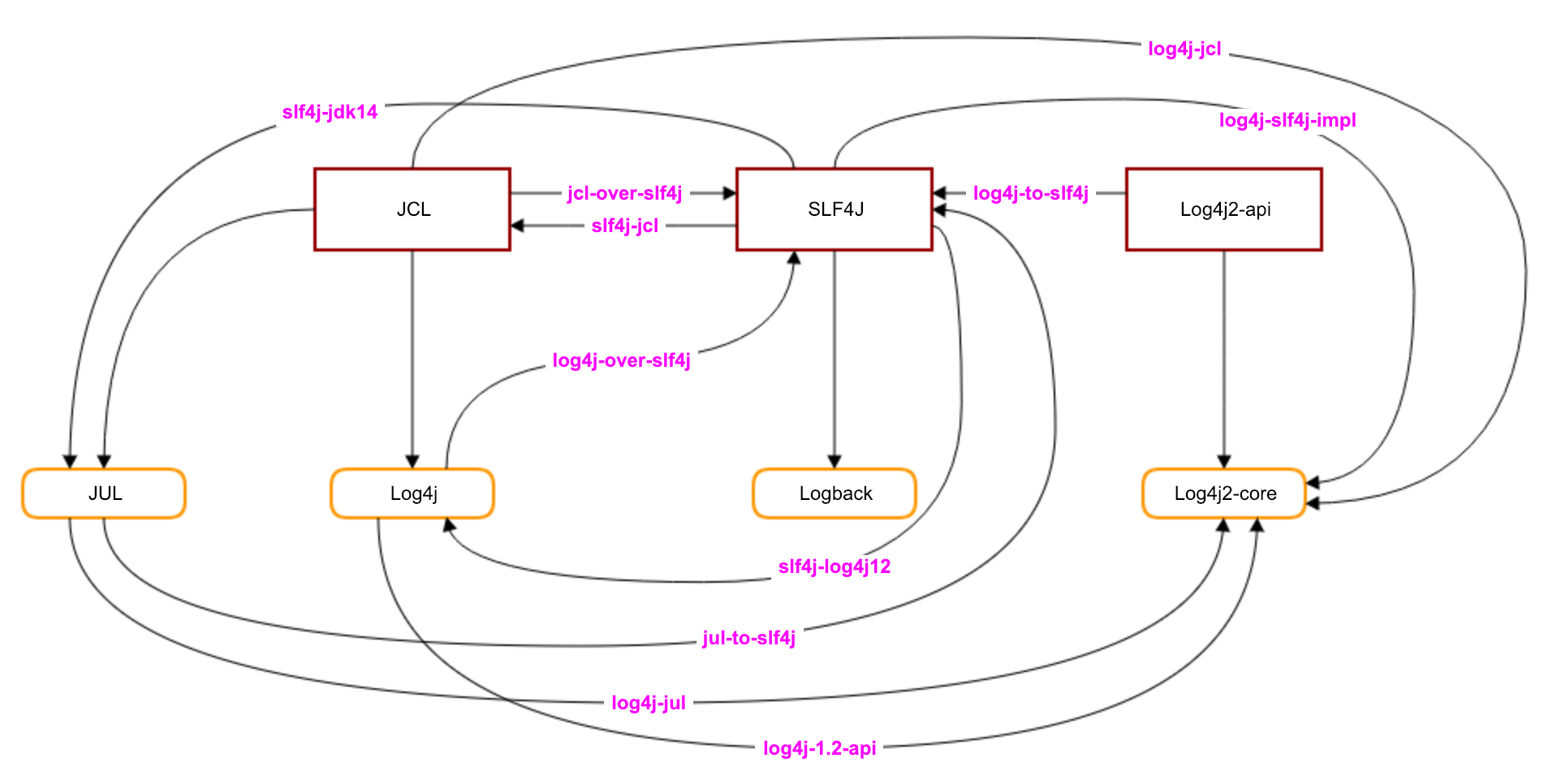

紫色为接口类,蓝色为实现,白色为转换

log4j-over-slf4j和slf4j-log4j12之所以不能共存,是因为它俩可以造成循环依赖!从图中可以看到,有不少会造成循环依赖的场景,比如jul-to-slf4j与slf4j-jdk14、jcl-over-slf4j与JCL等,有些jar的共存会让调用方产生困惑,不知道具体调用哪个

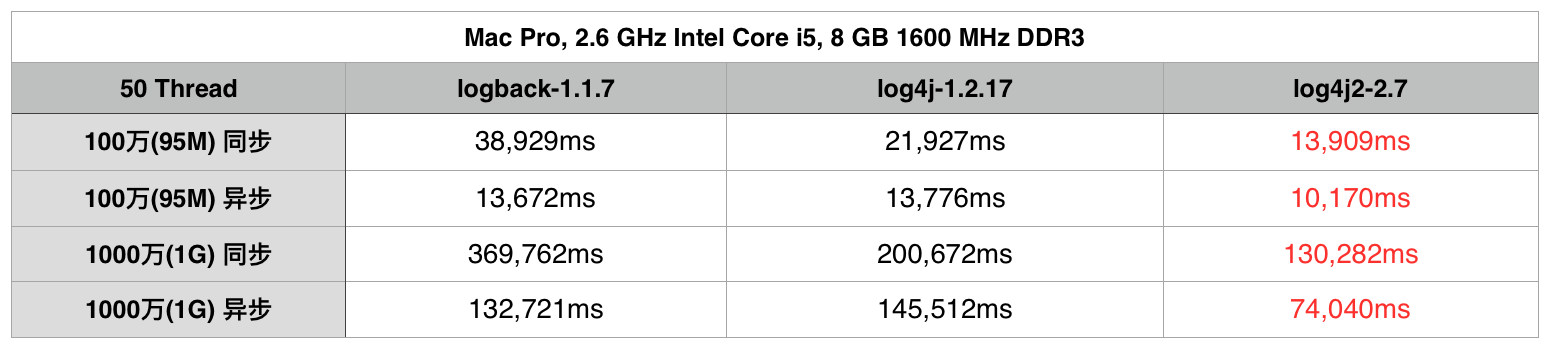

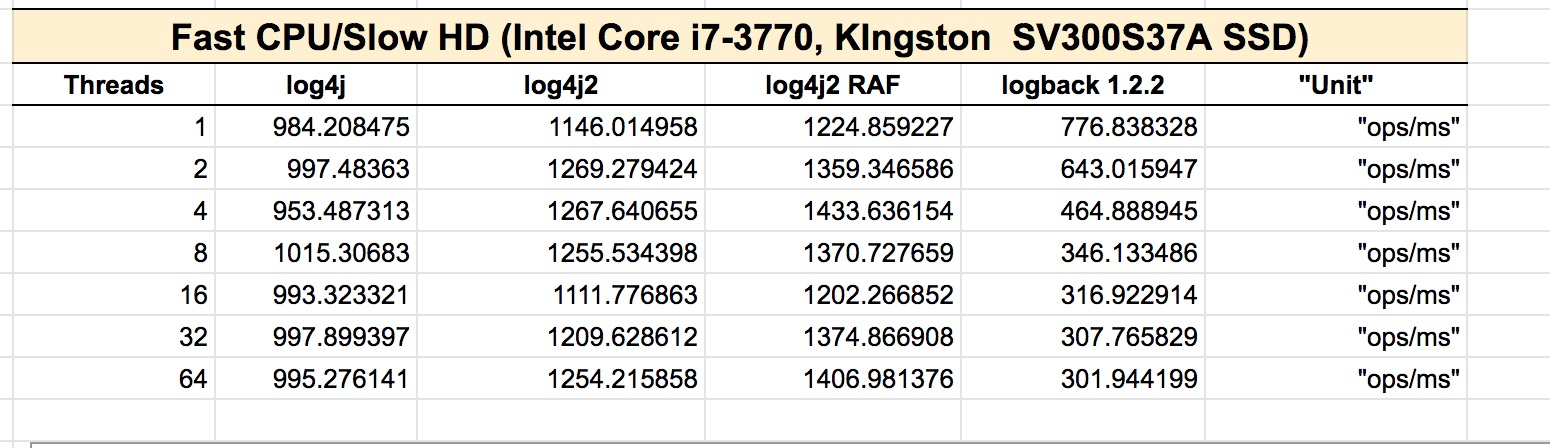

性能比较

- 可见在同步日志模式下, Logback的性能是最糟糕的.

- 而log4j2的性能无论在同步日志模式还是异步日志模式下都是最佳的.

其根本原因在于log4j2使用了LMAX, 一个无锁的线程间通信库代替了, logback和log4j之前的队列. 并发性能大大提升。有兴趣的同学,可以深入探索。

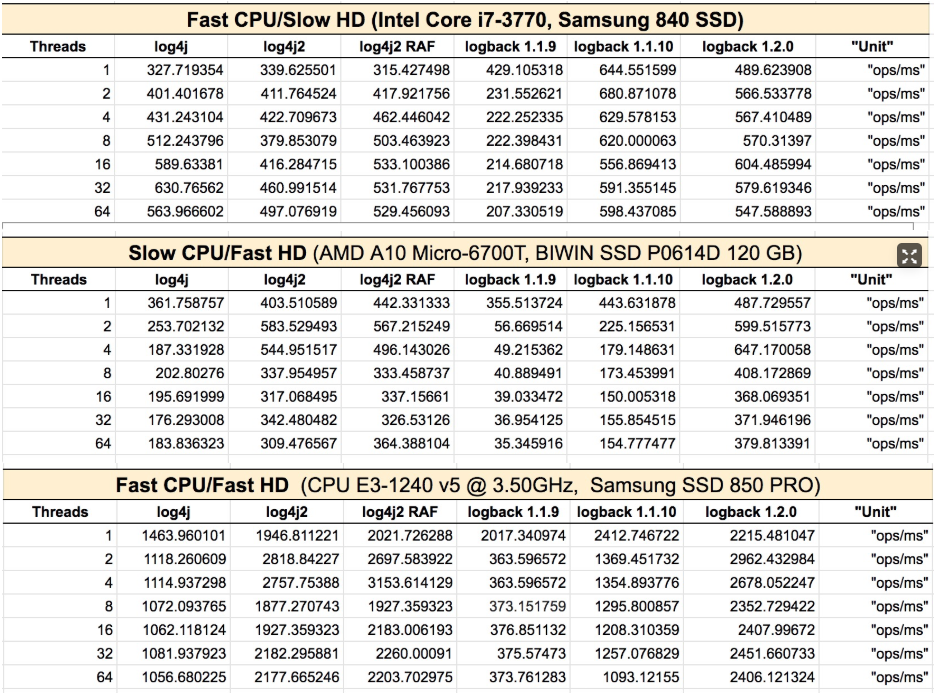

来自log4j2官方的比较数据(同步,在不同的瓶颈下)

https://logging.apache.org/log4j/2.x/performance.html:

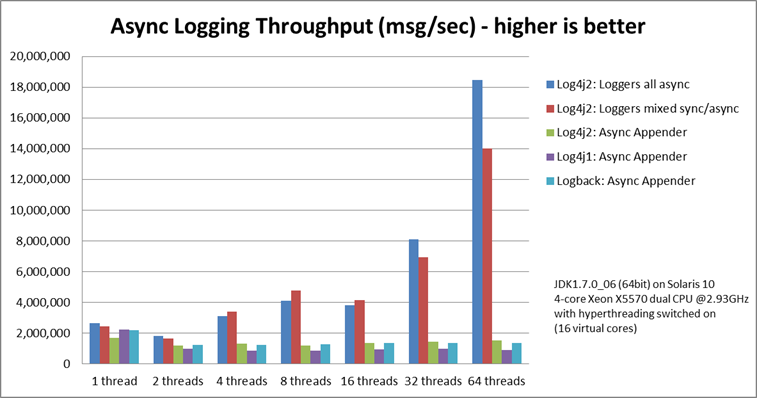

异步场景下的性能比较

AsyncAppender to FileAppender

Log4j2中的异步日志(AsyncAppender)

默认用ArrayBlockingQueue.队列大小为128.

关于ArrayBlockingQueue

ArrayBlockingQueue是一种地节省了空间,对于记日志有很好的适用性,同时避免内存的伸缩产生波动,也降低了GC的负担。入队出队时由内部的重入锁来控制并发,同时默认采用非公平锁的性质来处理活跃线程的闯入(Barge),从而提高吞吐量。

ArrayBlockingQueue在处理数据的入队提供了offer和put方法。两者的区别是:如果队列满了,offer直接返回给调用线程false, 而不用等待,这种场景较适合异步写日志,即使没有入队成功,仍然可以接受。而put方法则会让当前线程进入等待队列,并再次去竞争锁。

类似的,处理出队时提供了poll和take方法,区别也是是否阻塞调用线程。