Docker Common Problems

Startup

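If the docker daemon reports a socket error at startup, the cause is that the daemon's start options include -H fd:// while docker.socket is disabled. The daemon depends on the socket, but systemctl cannot bring docker.socket up because it is disabled. Running sudo systemctl enable docker.socket first fixes this.

Masking docker.socket is harsher than disabling it: a masked unit cannot be started even by hand, whereas a disabled unit can still be started by hand, after which the docker service can be started. For a masked unit, run systemctl unmask first.

$ sudo systemctl restart docker.socket

docker.socket also depends on the docker group existing. If the group is missing, add it with: groupadd docker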
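
The enable/unmask decision above can be sketched as a small shell helper. This is only a sketch under assumptions: the function names socket_fix_cmd and fix_docker_socket are made up for illustration, and it assumes systemctl is-enabled prints masked, disabled or enabled for the unit.

```shell
#!/bin/sh
# Map the state printed by `systemctl is-enabled docker.socket`
# to the command that makes the unit startable again.
# socket_fix_cmd is a hypothetical helper name, not part of docker or systemd.
socket_fix_cmd() {
  case "$1" in
    masked)   echo "systemctl unmask docker.socket" ;;
    disabled) echo "systemctl enable docker.socket" ;;
    *)        echo "" ;;   # enabled, static or unknown: nothing to fix
  esac
}

# Hypothetical wrapper: inspect the unit, apply the fix, then restart it.
fix_docker_socket() {
  state=$(systemctl is-enabled docker.socket 2>/dev/null)
  cmd=$(socket_fix_cmd "$state")
  # Intentionally unquoted so the command string splits into words.
  [ -n "$cmd" ] && sudo $cmd
  sudo systemctl restart docker.socket
}
```

Calling fix_docker_socket on the host applies whichever fix matches the current state; socket_fix_cmd alone only prints the command, so it is safe to try first.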

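Failed to start docker.service: Unit not found (RHEL 7.7)

systemctl list-unit-files | grep docker.service shows that docker.service exists and is enabled.

The real cause is that the docker package shipped in the RHEL 7.7 yum repository changed its startup parameters. Starting the daemon by hand fails as well, reporting that docker-runc cannot be found. See:

https://access.redhat.com/solutions/2876431

https://stackoverflow.com/questions/42754779/docker-runc-not-installed-on-system

When docker is installed with yum, it places configuration parameters (environment variables for docker.service) under /etc/sysconfig.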

Docker fails to start: Error starting daemon: Error initializing network controller: list bridge addresses failed: no available network

This happens because the docker0 bridge is missing when the daemon starts, so startup fails. Add it by hand:

ip link add docker0 type bridge

Once the daemon has started successfully, you can even delete docker0 by hand and the next start still succeeds; this time docker0 (172.30.0.0/16) is created automatically.

# systemctl status docker -l

Reference: https://github.com/docker/for-linux/issues/123

Alternatively, fix it this way: https://stackoverflow.com/questions/39617387/docker-daemon-cant-initialize-network-controller

This was related to the machine having several network cards (can also happen in machines with VPN)

The solution was to start docker manually like this:

/usr/bin/docker daemon --debug --bip=192.168.y.x/24

where 192.168.y.x is the MAIN machine IP and /24 is that IP's netmask. Docker will use this network range for building the bridge and firewall rules. The --debug flag is not really needed, but might help if something else fails.

After starting once, you can kill the docker daemon and start it as usual. AFAIK, docker has created a cached config for that --bip and should now work without it. Of course, if you clean the docker cache, you may need to do this again.

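Local network state is stored by default in /var/lib/docker/network/files/local-kv.db. To clean up the bridge network you cannot simply run docker network rm bridge, because bridge is a predefined, protected network that cannot be removed directly. Instead, delete /var/lib/docker/network/files/local-kv.db, delete docker0 as well, and then restart dockerd.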
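
The reset steps can be sketched as a script. This is a sketch, not a tested procedure: the helper names run and reset_bridge_network are made up, stopping the daemon before deleting the file is my assumption (the note only says to restart dockerd), and the script prints the commands unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run by default: print each command instead of running it.
# Set APPLY=1 to really execute (run and APPLY are hypothetical names).
run() {
  if [ "${APPLY:-0}" = 1 ]; then "$@"; else echo "+ $*"; fi
}

# Reset the default bridge network state kept by the daemon.
reset_bridge_network() {
  run systemctl stop docker                                 # assumption: stop first
  run rm -f /var/lib/docker/network/files/local-kv.db       # cached network state
  run ip link delete docker0                                # the old bridge
  run systemctl start docker                                # daemon recreates docker0
}
```

Running reset_bridge_network without APPLY=1 lists the four commands so they can be reviewed before anything is deleted.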

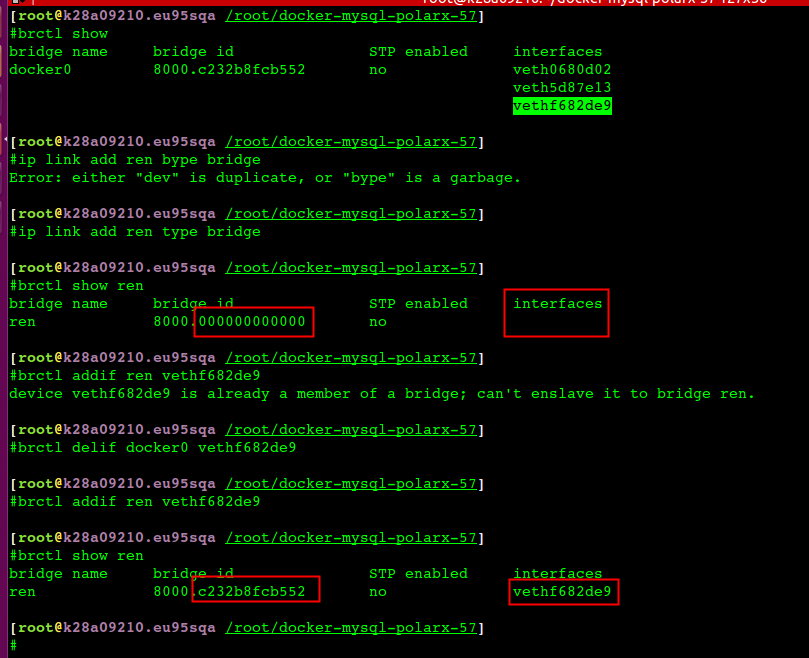

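Containers on AliOS cannot ping docker0

When running docker on AliOS and starting a container, the container could not ping docker0. Recreating the bridge by hand with brctl addbr docker0 and then adding the virtual interface to it fixed the problem. This is probably a bug somewhere in the system.

Oddly, while the ping is failing, starting a packet capture on docker0 on the host makes it work instantly, and stopping the capture breaks it again.

My guess is that this is an AliOS bug.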

systemctl start docker

Failed to start docker.service: Unit not found.

UNIT LOAD PATH

[Service]

Type=notify

Environment=PATH=/opt/kube/bin:/sbin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/X11R6/bin:/opt/satools:/root/bin

No systemctl in the container

Failed to get D-Bus connection: Operation not permitted: systemd cannot start inside a container by default. The container has to be started like this:

docker run -itd --privileged --name=ren drds_base:centos init   # the command must be init or systemd

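PID 1 must be systemd (init is a symlink to systemd) before systemctl can be used. The recommended workaround is https://github.com/gdraheim/docker-systemctl-replacement

systemd was created to replace init. The old init started every process serially, which was slow, and it did not track the final state of what it started. systemd starts services in parallel and monitors their state, so it can restart them repeatedly.

In newer releases init is a symlink to systemd.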
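
A quick way to tell whether systemctl can work in a container is to look at what runs as PID 1. A minimal sketch follows; the helper names are made up, and it assumes systemd reports itself as systemd in /proc/1/comm even when it was launched through the init symlink.

```shell
#!/bin/sh
# Name of the process running as PID 1 in the current (container) namespace.
pid1_name() {
  cat /proc/1/comm
}

# systemctl needs systemd as PID 1. Pass a name to test it,
# otherwise the live PID 1 is checked.
# can_use_systemctl is a hypothetical helper name.
can_use_systemctl() {
  name=${1:-$(pid1_name)}
  [ "$name" = "systemd" ]
}
```

Inside a container, can_use_systemctl || echo "PID 1 is $(pid1_name)" shows at a glance why systemctl refuses to connect.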

busybox/Alpine/Scratch

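busybox bundles the common Linux tools (nc/telnet/cat...) in a very compact form, small enough to fit on a single floppy disk.

Alpine is a stripped-down Linux distribution, smaller and more secure, that uses musl libc instead of glibc.

scratch is an empty base image that contains nothing at all.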

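Shell not found

In a Dockerfile (https://www.ardanlabs.com/blog/2020/02/docker-images-part1-reducing-image-size.html):

CMD ./hello (or RUN in the same shell form) is equivalent to /bin/sh -c "./hello" and therefore needs a shell in the image. The exec form, CMD ["./hello"], runs the binary directly and does not need one.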

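ENTRYPOINT vs CMD

In a Dockerfile, CMD can be a command or a list of arguments. When it is arguments, they are passed to ENTRYPOINT.

Within a Dockerfile, a later ENTRYPOINT or CMD instruction overrides any earlier ENTRYPOINT or CMD instruction.

An ENTRYPOINT or CMD supplied on the docker run command line overrides the ENTRYPOINT or CMD in the Dockerfile.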
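
The way CMD and run-time arguments combine with ENTRYPOINT can be modelled in a few lines of shell. This is a toy model for the exec form only, not docker's implementation; effective_argv is a made-up name, and it ignores the --entrypoint override.

```shell
#!/bin/sh
# Toy model of how the container's command line is assembled (exec form).
# $1 = ENTRYPOINT from the image (may be empty), $2 = CMD from the image,
# remaining args = whatever follows the image name in `docker run`.
# effective_argv is a hypothetical name used only for this illustration.
effective_argv() {
  entrypoint=$1
  cmd=$2
  shift 2
  if [ "$#" -gt 0 ]; then
    cmd="$*"        # run-time arguments replace the image's CMD
  fi
  # With an ENTRYPOINT, CMD acts as its arguments; without one, CMD is the command.
  echo "${entrypoint:+$entrypoint }$cmd"
}
```

For example, effective_argv /bin/echo hello models an image with ENTRYPOINT /bin/echo and CMD hello, and adding world after it models docker run image world.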

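COPY vs ADD

COPY and ADD differ mainly in that ADD can fetch resources from a remote URL. COPY can only read resources from the host where docker build runs and copy them into the image, while ADD can also download from a remote server by URL.

When both can do the job, prefer COPY. ADD is the better fit for reading a local tar file and unpacking it into the image.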

Digest vs Image ID

Pull images by digest. Even if an attacker manages to alter the content behind a digest, docker will detect it, because on pull it computes the sha256 of the image content and compares it with the digest.

docker images --digests

- The “digest” is a hash of the manifest, introduced in Docker registry v2.

- The image ID is a hash of the local image JSON configuration. In docker inspect output it is the Id field; the digest is what appears under RepoDigests.
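
The tamper check behind digests is plain content addressing, which can be reproduced with sha256sum. This sketch hashes an ordinary file rather than a real manifest, and content_digest and verify_digest are made-up helper names, not docker commands.

```shell
#!/bin/sh
# Compute a docker-style digest string ("sha256:<hex>") for a file.
content_digest() {
  echo "sha256:$(sha256sum "$1" | cut -d' ' -f1)"
}

# Succeed only when the file's content hashes to the expected digest.
# verify_digest is a hypothetical helper name.
verify_digest() {
  [ "$(content_digest "$1")" = "$2" ]
}
```

Any change to the file changes its hash, so verify_digest fails for modified content, which is the same reason a pull by digest cannot silently return different bytes.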

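Packet capture and debugging in containers: nsenter

Get the pid: docker inspect -f {{.State.Pid}} c8f874efea06

nsenter is essentially a wrapper around the setns example program: instead of passing a file descriptor for the namespace, you pass a process ID. A detailed case:

# docker inspect cb7b05d82153 | grep -i SandboxKey   # find the network namespace from the pause container's ID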
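
Putting the two steps together, a capture inside a container's network namespace could look like the sketch below. The helper names are made up for illustration; -t selects the target pid and -n enters its network namespace, so tools installed on the host (tcpdump, ip) run against the container's interfaces.

```shell
#!/bin/sh
# Build the nsenter command line that runs a tool inside the network
# namespace of the process with the given pid.
# netns_cmd is a hypothetical helper; it only prints the command.
netns_cmd() {
  pid=$1
  shift
  echo "nsenter -t $pid -n $*"
}

# Hypothetical wrapper: look up the container's pid, then run the tool there.
run_in_container_netns() {
  container=$1
  shift
  pid=$(docker inspect -f '{{.State.Pid}}' "$container") || return 1
  # Intentionally unquoted so the command string splits into words.
  sudo $(netns_cmd "$pid" "$@")
}
```

For example, run_in_container_netns c8f874efea06 tcpdump -i eth0 -nn captures on the container's eth0 without installing tcpdump in the image.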

Creating a virtual network interface

To make this interface you'd first need to make sure that you have the dummy kernel module loaded. You can do this like so:

Renaming a network interface

ip link set ens33 down

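OS version

For Docker, use el7; performance on el6 is too poor. Docker requires Linux kernel 3.10 or later. Kernels older than 3.10 lack some of the features needed to run Docker containers, and under certain conditions they cause data loss and frequent kernel panics.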

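Cleaning up mounts

If deleting /var/lib/docker fails with a busy error, usually a process is still using it: find the process with fuser and kill it. The other cause is mounts under the directory that prevent deletion; unmount them in bulk with mount | awk '{ print $3 }' | grep overlay2 | xargs umount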
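
The one-liner can be split into a filter and a loop, which makes it easy to review the mount points before unmounting anything. A minimal sketch with made-up helper names; it assumes the usual mount output layout and mount points without spaces.

```shell
#!/bin/sh
# Read `mount` output on stdin and print mount points that live under overlay2.
# Assumes the "<src> on <mountpoint> type <fs> (...)" layout, so the
# mount point is field 3.
overlay2_mounts() {
  awk '$3 ~ /overlay2/ { print $3 }'
}

# Hypothetical wrapper: unmount each of them, one at a time.
umount_overlay2() {
  mount | overlay2_mounts | while read -r mp; do
    umount "$mp"
  done
}
```

Running mount | overlay2_mounts first lists what umount_overlay2 would touch.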

No space left on device

OSError: [Errno 28] No space left on device:

Usually the disk is not actually full. The filesystem may instead have run out of inodes (check inode usage with df -ih).
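
The inode check is easy to script. The sketch below is only an illustration: the helper names, the default path and the 90% threshold are my assumptions, and it expects a numeric IUse% column (some filesystems print a dash there).

```shell
#!/bin/sh
# Read `df -iP <path>` output on stdin and print the inode usage percentage
# (the IUse% column, field 5) of the first filesystem line, without the % sign.
inode_use_pct() {
  awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Hypothetical check: complain when inode usage on a path reaches a threshold.
check_inodes() {
  path=${1:-/var/lib/docker}
  limit=${2:-90}
  pct=$(df -iP "$path" | inode_use_pct)
  if [ "$pct" -ge "$limit" ]; then
    echo "inode usage on $path is ${pct}%"
    return 1
  fi
}
```

If check_inodes passes and plain df also shows free space, the cause lies elsewhere, as in the case described next.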

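Use fallocate to test whether a file can be created.

Use fdisk -l and tune2fs -l to confirm that the partition and filesystem are healthy.

In this case fallocate failed to create a file with a very long name (the file name from the original error), while creating a file with a short name succeeded.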

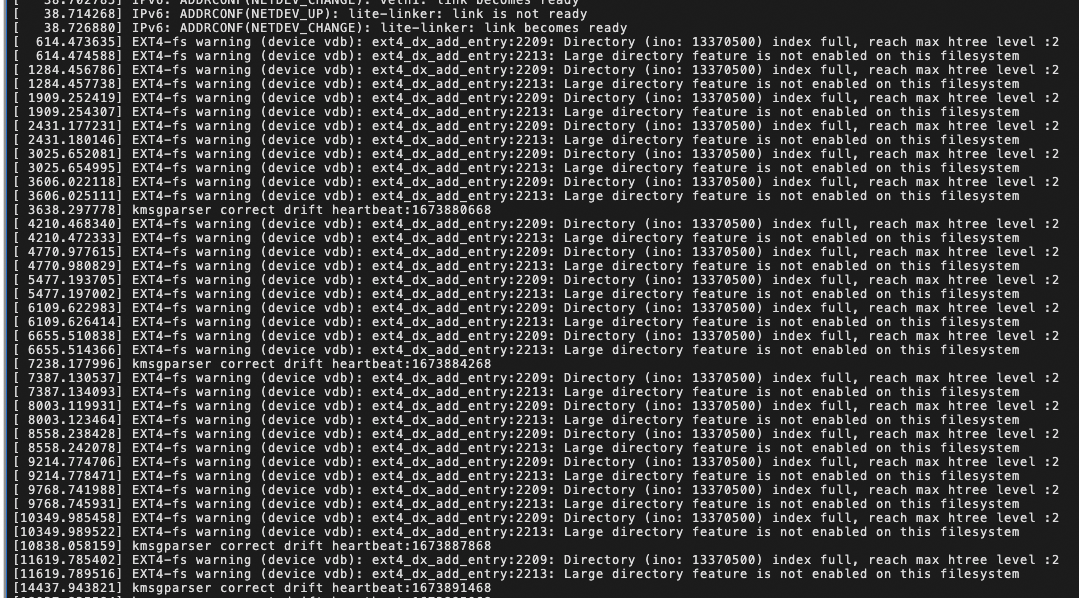

Check the kernel messages with dmesg:

[13155344.231942] EXT4-fs warning (device sdd): ext4_dx_add_entry:2461: Directory (ino: 3145729) index full, reach max htree level :2

It looks like too many small files overflowed the ext4 directory index (htree). Verify with tune2fs -l /dev/nvme1n1p1:

# tune2fs -l /dev/nvme1n1p1 | grep Filesystem

Run tune2fs -O large_dir /dev/nvme1n1p1 to turn on the large_dir option:

tune2fs -l /dev/nvme1n1p1 | grep -i large

After enabling it, Filesystem features includes large_dir. Note that only kernel 4.13 and later support this feature.
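
Both conditions, the feature flag and the kernel version, can be checked before relying on large_dir. A minimal sketch with made-up helper names; the version parser only looks at the major and minor numbers.

```shell
#!/bin/sh
# Read `tune2fs -l <device>` output on stdin; succeed if large_dir is listed
# on the "Filesystem features:" line.
has_large_dir() {
  grep '^Filesystem features:' | grep -qw 'large_dir'
}

# large_dir needs kernel 4.13 or later. Pass a version string to test it,
# otherwise the running kernel is checked. Hypothetical helper name.
kernel_supports_large_dir() {
  ver=${1:-$(uname -r)}
  major=${ver%%.*}          # text before the first dot
  rest=${ver#*.}            # text after the first dot
  minor=${rest%%[!0-9]*}    # leading digits of the remainder
  [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 13 ]; }
}
```

On the host this would be used as tune2fs -l /dev/nvme1n1p1 | has_large_dir && kernel_supports_large_dir.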

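CPU resource allocation

Kubernetes limits CPU with CFS quota, which caps the CPU time a process may use per period. When dedicated and shared instances run on the same node, a rise in instance load can cause the dedicated instance's workload to bounce between CPU cores, hurting its performance. To avoid that, we want dedicated and shared instances on the same node bound to separate CPUs so they do not interfere with each other.

The kernel's default cpu.shares is 1024. Container size can also be controlled through cpu.cfs_quota_us / cpu.cfs_period_us (the quotient is the number of cores).

With cpu.shares applied across multiple cgroup levels, the upper levels take priority, so you may often see load spread unevenly across cores, with some cores never fully used. cpu.shares is meant for arbitrating contention: when mixing offline and online workloads, for example, give the online workloads a larger cpu.shares.

Limit containers to a quota of 16 cores:

docker update --cpu-quota=1600000 --cpu-period=100000 c1 c2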
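
The quota arithmetic is worth spelling out, since it is easy to drop a zero. A small sketch with made-up helper names:

```shell
#!/bin/sh
# Cores granted by a CFS limit = cpu.cfs_quota_us / cpu.cfs_period_us.
cpu_cores() {
  awk -v q="$1" -v p="$2" 'BEGIN { printf "%g\n", q / p }'
}

# Inverse: the quota (in microseconds) needed for a whole number of cores.
quota_for_cores() {
  echo $(( $1 * $2 ))
}
```

With the values from the command above, cpu_cores 1600000 100000 gives 16, and quota_for_cores 16 100000 gives back 1600000.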

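sock

There are two sockets involved: dockershim.sock and docker.sock. dockershim.sock is served by a plugin that implements the CRI interface and mainly translates k8s requests into docker requests; in the end docker still manages containers through docker.sock.

kubelet --CRI--> dockershim (the CRI plugin built into kubelet) --> docker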

Docker image API

List all image names: GET /v2/_catalog

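Deleting images from a registry

By default the registry does not allow images to be deleted. Change the registry configuration to enable deletion:

# cat config.yml

Then use the API to look up the ID of the image to delete:

# look up the tags of the image to delete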
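
Once deletion is enabled, the flow through the registry HTTP API v2 is: resolve the tag to a manifest digest, then delete the manifest by that digest. The sketch below is my reconstruction of that flow, not the commands from the original note; the helper names are made up, and it assumes Docker v2 schema manifests (OCI images need a different Accept header).

```shell
#!/bin/sh
# Build the manifest URL for an image reference (tag or digest).
# $1 = registry base URL, $2 = repository name, $3 = tag or digest.
manifest_url() {
  echo "$1/v2/$2/manifests/$3"
}

# Ask the registry for the digest behind a tag. The digest comes back in the
# Docker-Content-Digest response header.
tag_digest() {
  curl -sI -H 'Accept: application/vnd.docker.distribution.manifest.v2+json' \
    "$(manifest_url "$1" "$2" "$3")" |
    tr -d '\r' |
    awk 'tolower($1) == "docker-content-digest:" { print $2 }'
}

# Delete the manifest by digest (the registry does not accept a tag here).
delete_image() {
  digest=$(tag_digest "$1" "$2" "$3")
  [ -n "$digest" ] || return 1
  curl -s -X DELETE "$(manifest_url "$1" "$2" "$digest")"
}
```

A call would look like delete_image http://localhost:5000 app v1, where the registry address, repository and tag are placeholders. Deleting the manifest does not free disk space by itself; the registry's garbage collection has to run afterwards.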

Check whether the daemon alone can be restarted while containers keep running

$ sudo docker info | grep Restore
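
The same check can be wrapped so a script can branch on it. A minimal sketch; live_restore_enabled is a made-up name, and it assumes docker info prints a line of the form Live Restore Enabled: true or false.

```shell
#!/bin/sh
# Read `docker info` output on stdin; succeed if live restore is on.
# Assumes a line like "Live Restore Enabled: true".
live_restore_enabled() {
  grep -i 'Live Restore Enabled' | grep -qi 'true'
}
```

Usage would be sudo docker info | live_restore_enabled || echo "containers will stop with the daemon". The feature is switched on with "live-restore": true in /etc/docker/daemon.json.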

References

https://www.ardanlabs.com/blog/2020/02/docker-images-part1-reducing-image-size.html