上下文切换的代价

概念

进程切换、软中断、内核态用户态切换、CPU超线程切换

内核态用户态切换:还是在一个线程中,只是由用户态进入内核态为了安全等因素需要更多的指令,系统调用具体多做了啥请看:https://github.com/torvalds/linux/blob/v5.2/arch/x86/entry/entry_64.S#L145

软中断:比如网络包到达,触发ksoftirqd(每个核一个)进程来处理,是进程切换的一种

进程切换是里面最重的,少不了上下文切换,代价还有进程阻塞唤醒调度。另外进程切换有主动让出CPU的切换、也有时间片用完后被切换

CPU超线程切换:最轻,发生在CPU内部,OS、应用都无法感知

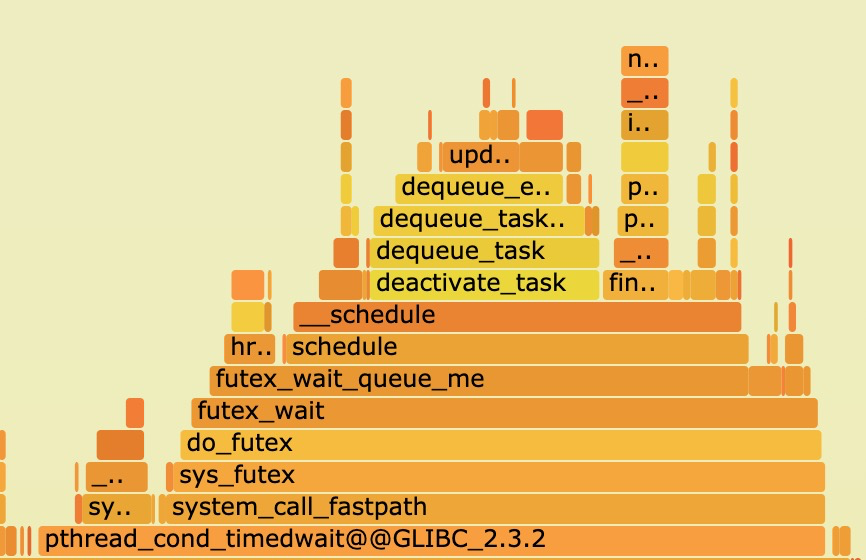

多线程调度下的热点火焰图:

上下文切换后还会因为调度的原因导致线程卡顿更久

Linux 内核进程调度时间片一般是HZ的倒数,HZ在编译的时候一般设置为1000,倒数也就是1ms,也就是每个进程的时间片是1ms(早年是10ms–HZ 为100的时候),如果进程1阻塞让出CPU进入调度队列,这个时候调度队列前还有两个进程2/3在排队,也就是最差会在2ms后才轮到1被调度执行。负载决定了排队等待调度队列的长短,如果轮到调度的进程已经ready那么性能没有浪费,反之如果轮到被调度但是没有ready(比如网络回包没到达)相当浪费了一次调度

sched_min_granularity_nsis the most prominent setting. In the original sched-design-CFS.txt this was described as the only “tunable” setting, “to tune the scheduler from ‘desktop’ (low latencies) to ‘server’ (good batching) workloads.”In other words, we can change this setting to reduce overheads from context-switching, and therefore improve throughput at the cost of responsiveness (“latency”).

The CFS setting as mimicking the previous build-time setting, CONFIG_HZ. In the first version of the CFS code, the default value was 1 ms, equivalent to 1000 Hz for “desktop” usage. Other supported values of CONFIG_HZ were 250 Hz (the default), and 100 Hz for the “server” end. 100 Hz was also useful when running Linux on very slow CPUs, this was one of the reasons given when CONFIG_HZ was first added as an build setting on X86.

或者参数调整:

1 | #sysctl -a |grep -i sched_ |grep -v cpu |

测试

How long does it take to make a context switch?

1 | model name : Intel(R) Xeon(R) Platinum 8269CY CPU @ 2.50GHz |

测试代码仓库:https://github.com/tsuna/contextswitch

Source code: timectxsw.c Results:

- Intel 5150: ~4300ns/context switch

- Intel E5440: ~3600ns/context switch

- Intel E5520: ~4500ns/context switch

- Intel X5550: ~3000ns/context switch

- Intel L5630: ~3000ns/context switch

- Intel E5-2620: ~3000ns/context switch

如果绑核后上下文切换能提速在66-45%之间

系统调用代价

Source code: timesyscall.c Results:

- Intel 5150: 105ns/syscall

- Intel E5440: 87ns/syscall

- Intel E5520: 58ns/syscall

- Intel X5550: 52ns/syscall

- Intel L5630: 58ns/syscall

- Intel E5-2620: 67ns/syscall

https://mp.weixin.qq.com/s/uq5s5vwk5vtPOZ30sfNsOg 进程/线程切换究竟需要多少开销?

1 | /* |

平均每次上下文切换耗时3.5us左右

软中断开销计算

下面的计算方法比较糙,仅供参考。压力越大,一次软中断需要处理的网络包数量就越多,消耗的时间越长。如果包数量太少那么测试干扰就太严重了,数据也不准确。

测试机将收发队列设置为1,让所有软中断交给一个core来处理。

无压力时 interrupt大概4000,然后故意跑压力,CPU跑到80%,通过vmstat和top查看:

1 | $vmstat 1 |

top看到 si% 大概为20%,也就是一个核25000个interrupt需要消耗 20% 的CPU, 说明这些软中断消耗了200毫秒

200*1000微秒/25000=200/25=8微秒,8000纳秒 – 偏高

降低压力CPU 跑到55% si消耗12%

1 | procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu----- |

120*1000微秒/(21000)=5.7微秒, 5700纳秒 – 偏高

降低压力(4核CPU只压到15%)

1 | procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu----- |

单核11000 interrupt,对应 si CPU 2.2%

22*1000/11000= 2微秒 2000纳秒 略微靠谱

超线程切换开销

最小,基本可以忽略,1ns以内

lmbench测试工具

lmbench的lat_ctx等,单位是微秒,压力小的时候一次进程的上下文是1540纳秒

1 | [root@plantegg 13:19 /root/lmbench3] |

协程对性能的影响

将WEB服务改用协程调度后,TPS提升50%(30000提升到45000),而contextswitch数量从11万降低到8000(无压力的cs也有4500)

1 | procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu----- |

没开协程CPU有20%闲置打不上去,开了协程后CPU 跑到95%

结论

- 进程上下文切换需要几千纳秒(不同CPU型号会有差异)

- 如果做taskset 那么上下文切换会减少50%的时间(避免了L1、L2 Miss等)

- 线程比进程上下文切换略快10%左右

- 测试数据和实际运行场景相关很大,比较难以把控,CPU竞争太激烈容易把等待调度时间计入;如果CPU比较闲体现不出cache miss等导致的时延加剧

- 系统调用相对进程上下文切换就很轻了,大概100ns以内

- 函数调用更轻,大概几个ns,压栈跳转

- CPU的超线程调度和函数调用差不多,都是几个ns可以搞定

看完这些数据再想想协程是在做什么、为什么效率高就很自然的了