分析与思考——黄奇帆的复旦经济课笔记

这是一次奇特的读书经历,因为黄奇帆这本书的内容主要是17-19年的一些报告内容,里面给出了他的一些看法以及各种数据,所以在3年后的2021年来读的话,我能够搜索当前的数据来印证他的判断,这种穿越的感觉很好。

黄比较厉害的是思路、逻辑清晰,然后各种数据比较丰富,所以他对问题的判断和看法比较准确。另外一个准确的是任大炮。相较另外一些教授、专家就混乱无比了,比如易宪容

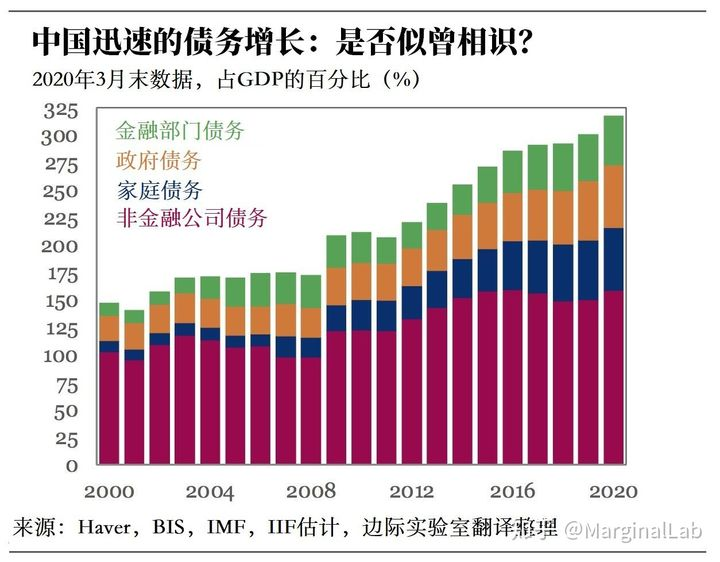

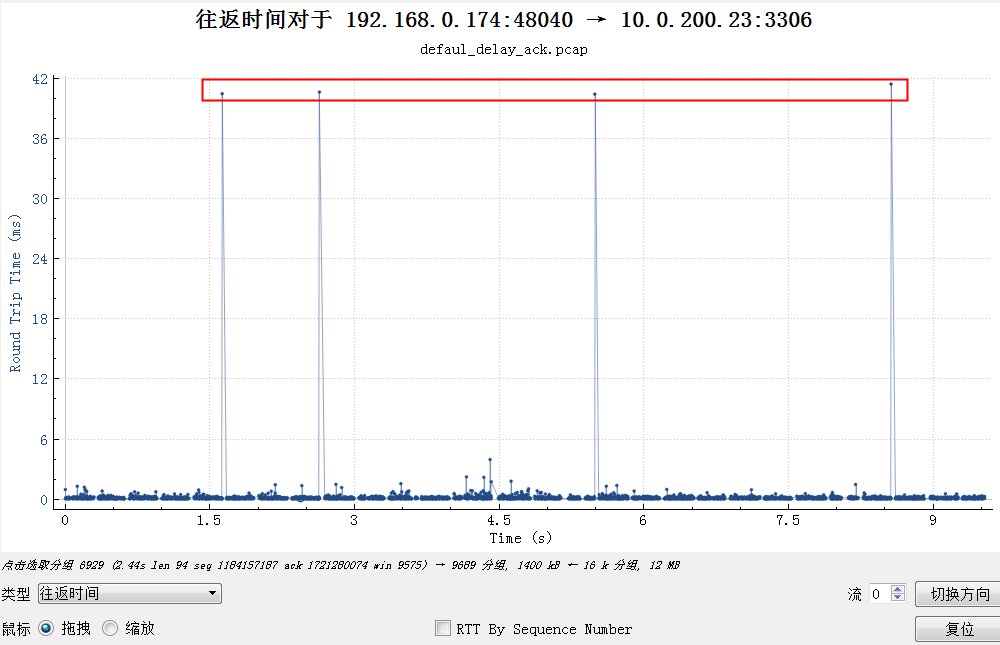

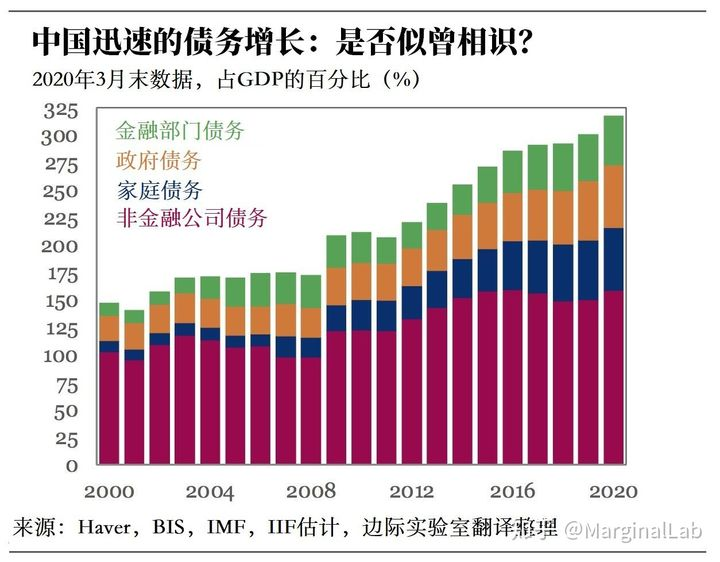

比如,去杠杆比他预估的要差多了,杠杆这几年不但没去成反而加大了;政府债务占比也没有按他的预期减少,稳中有增;房地产开工面积也在增加,当然增速很慢了,占GDP比重也在缓慢增加。

以下引用内容都是从网络搜索所得,其它内容为黄书中直接复制出来的,附录内容没有读。

去杠杆

- 国家M2。2017年中国M2已经达到170万亿元,这几个月下来到5月底已经是176万亿元,我们的GDP 2017年是82万亿元,M2与GDP之比已经是2.1∶1。美国的M2跟它的GDP之比是0.9∶1,美国GDP是20万亿美元,他们M2统统加起来,尽管已经有了三次(Q1、Q2、Q3)的宽松,现在的M2其实也就18万亿美元,所以我们这个指标就显然是非常非常的高。

- 我们国家金融业的增加值。2017年年底占GDP总量的7.9%,2016年年底是8.4%,2017年五六月份到了8.8%,下半年开始努力地约束金融业夸张性的发展或者说太高速的发展,把这个约束了一下,所以到2017年年底是7.9%,2018年1—5月份还是在7.8%左右。这个指标也是世界最高,全世界金融增加值跟全世界GDP来比的话,平均是在4%左右。像日本尽管有泡沫危机,从20世纪80年代一直到现在,基本上在百分之五点几。美国从1980年到2000年也是百分之五点几,2000年以来,一直到次贷危机才逐渐增加。2008年崩盘之前占GDP的百分之八点几。这几年约束了以后,现在是在7%左右。它是世界金融的中心,全世界的金融资源集聚在华尔街,集聚在美国,产生的金融增加值也就是7%,我们并没有把世界的金融资源增加值、效益利润集聚到中国来,中国的金融业为何能够占中国80多万亿元GDP的百分之八点几?中国在十余年前,也就是2005年的时候,金融增加值占当时GDP的5%不到,百分之四点几,快速增长恰恰是这些年异常扩张、高速发展的结果。这说明我们金融发达吗?不对,其实是脱实就虚,许多金融GDP把实体经济的利润转移过来,使得实体经济异常辛苦,从这个意义上说,这个指标是泡沫化的表现。

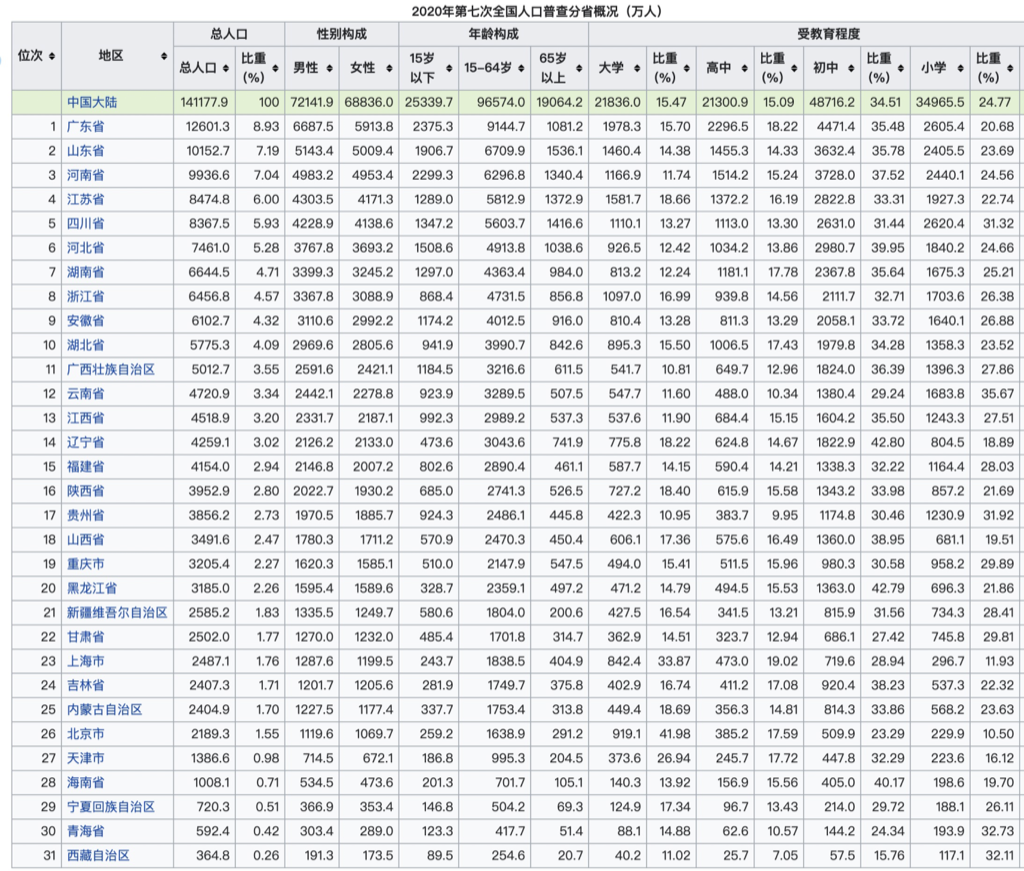

- 我们国家宏观经济的杠杆率。非银行非金融的企业负债,政府部门的负债(40%多),加上居民部门的负债(50%),三方面加起来是GDP的2.5倍,250%,在世界100多个国家里我们是前5位,是偏高的,我们跟美国相当,美国也是250%,日本是最高的,现在是440%,英国也比较高,当然欧洲一些国家,比如意大利或者西班牙,以及像希腊等一些债务财政出问题的小的国家,他们也异常的高。即使这样,我们的债务杠杆率排在世界前5位,也是异常的高。

- 每年全社会新增的融资。我们的企业每年都要融资,除了存量借新还旧,存量之外,有个增量,我们在十年前每年全社会新增融资量是五六万亿元,五年前新增的量在10万亿—12万亿元,2017年新增融资18万亿元。每年新增的融资里面,股权资本金性质的融资只占总的融资量的10%不到一点,也就是说91%是债权,要么是银行贷款,要么是信托,要么是小贷公司,或者直接是金融的债券。大家可以想象,如果每年新增的融资总是90%以上是债权,10%是股权的话,这个数学模型推它十年,十年以后中国的债务不会缩小,只会越来越高。

2021年4月末,广义货币(M2)余额226.21万亿元,同比增长8.1%,增速分别比上月末和上年同期低1.3个和3个百分点;狭义货币(M1)余额60.54万亿元,同比增长6.2%,增速比上月末低0.9个百分点,比上年同期高0.7个百分点;流通中货币(M0)余额8.58万亿元,同比增长5.3%。当月净回笼现金740亿元。

杠杆率是250%,在全世界来说是排在前面,是比较高的。这个指标里面又分成三个方面,其中政府的债务占GDP不到50%,国家统计公布的数据是40%多,但是有些隐性债务没算进去,就算算进去也不到50%。第二个方面是老百姓的债务,十年前还只占10%,五年前到了20%,我印象中有一年中国人民银行也说了,中国居民部门的债务还可以放一点杠杆,这两年按揭贷款异常发展起来,居民债务两年就上升到50%。老百姓这一块的债务,主要是房产的债务,也包括信用卡和其他投资,总的也占GDP50%左右。两个方面加起来就等于GDP,剩下的160%是企业债务,美国企业负债是美国GDP的60%,而中国企业的负债是GDP的160%,这个指标是有问题的

中国政府的40多万亿元债务是中央政府的债务有十几万亿元,地方政府的债务有20多万亿元,加在一起40多万亿元,占GDP 50%左右,我们是把区县、地市、省级政府到国家级统算在一起的。所以,我们中国政府的债务算得是比较充分的。

人民网北京2021年4月7日电 (记者王震)国务院新闻办公室4月7日就贯彻落实“十四五”规划纲要,加快建立现代财税体制有关情况举行发布会。财政部部长助理欧文汉介绍,截至2020年末,地方政府债务余额25.66万亿元,控制在全国人大批准的限额28.81万亿元之内,加上纳入预算管理的中央政府债务余额20.89万亿元,全国政府债务余额46.55万亿元,政府债务余额与GDP的比重为45.8%,低于国际普遍认同的60%警戒线,风险总体可控。

去企业负债杠杆方法:第一是坚定不移地把没有任何前途的、过剩的企业,破产关闭,伤筋动骨、壮士断腕,该割肿瘤就要割掉一块(5%)。第二是通过收购兼并,资产重组去掉一部分坏账,去掉一部分债务,同时又保护生产力(5%)。第三是优势的企业融资,股权融资从新增融资的10%增加到30%、40%、50%,这应该是一个要通过五年、十年实现的中长期目标。第四是柔性地、柔和地通货膨胀,稀释债务,一年2个点,五年就是10个点,也很可观。第五是在基本面上保持M2增长率和GDP增长率与物价指数增长率之和大体相当。我相信通过这五方面措施,假以时日,务实推进,那么有个三五年、近十年,我们去杠杆四五十个百分点的宏观目标就会实现。

怎么把股市融资和私募投资从10%上升到40%、50%,这就是我们中国投融资体制,金融体制要发生一个坐标转换。这里最重要的,实际上是两件事。第一件事,要把证券市场、资本市场搞好。十几年前上证指数两三千点,现在还是两三千点。美国股市指数翻了两番,香港股市指数也翻了两番。我国国民经济总量翻了两番,为什么股市指数不增长?这里面要害是什么呢?可以说有散户的结构问题,有长期资本缺乏的问题,有违规运作处罚不力的问题,有注册制不到位的问题,各种问题都可以说。归根到底最重要的一个问题是什么呢?就是退市制度没搞好。

供应侧结构化改革三去一降一补

供应侧结构化改革三去一降一补:去产能、去库存、去杠杆、降成本、补短板

中国所有的货物运输量占GDP的比重是15%,美国、欧洲都在7%,日本只有百分之五点几。我们占15%就比其他国家额外多了几万亿元的运输成本。中国交通运输的物流成本高,除了基础设施很大一部分是新建投资、折旧成本较高以外,相当大的部分是管理体制造成的。由于我们的管理、软件、系统协调性、无缝对接等方面存在很多问题,造成了各种物流成本抬高。在这个问题上,各个地方,各个系统,各个行业都把这方面问题重视一下、协调一下,人家7%,我们哪怕降不到7%的GDP占比,能够降3%—4%的占比,就省了3万亿—4万亿元

按国际惯例,个人所得税率一般低于企业所得税率,我国的个人所得税采取超额累进税率与比例税率相结合的方式征收,工资薪金类为超额累进税率5%—45%。最高边际税率45%,是在1950年定的,当时我国企业所得税率是55%,个人所得税率定在45%有它的理由。现在企业所得税率已经降到25%,个人所得税率还保持在45%,明显高于前者,也高于大多数国家25%左右的水平。

到2018年底,中国个人住房贷款余额25.75万亿元,而公积金个人住房贷款余额为4.98万亿元,在整个贷款余额中不到20%,其为人们购房提供低息贷款的功能完全可以交由商业银行按揭贷款来解决。可以考虑公积金合并为年金

互联网金融平台、物联网金融平台、物联网+金融形成的平台会在这里起颠覆性的、全息性的、五个全方位信息(全产业链的信息、全流程的信息、全空间布局的信息、全场景的信息、全价值链的信息)的配置作用

“三元悖论”,即安全、廉价、便捷三者不可能同时存在

鉴于互联网商业平台公司的商业模式已经远远超出传统商业规模所能达成的社会影响力,所以,互联网商业平台公司与其说是在从事商业经营,不如说是在从事网络社会的经营和管理。正因如此,国家有必要通过立法,构建一种由网络安全、金融安全、社会安全、财政安全等相关部门参加的“互联网技术研发信息日常跟踪制度”。

货币

“二战”后建立的“布雷顿森林体系”,即“美元与黄金挂钩,其他国家货币与美元挂钩”的“双挂钩”制度,其实质也是一种“金本位”制度,而1971年美国总统尼克松宣布美元与黄金脱钩也正式标志着美元放弃了以黄金为本位的货币制度,随之采取的是“主权信用货币制”

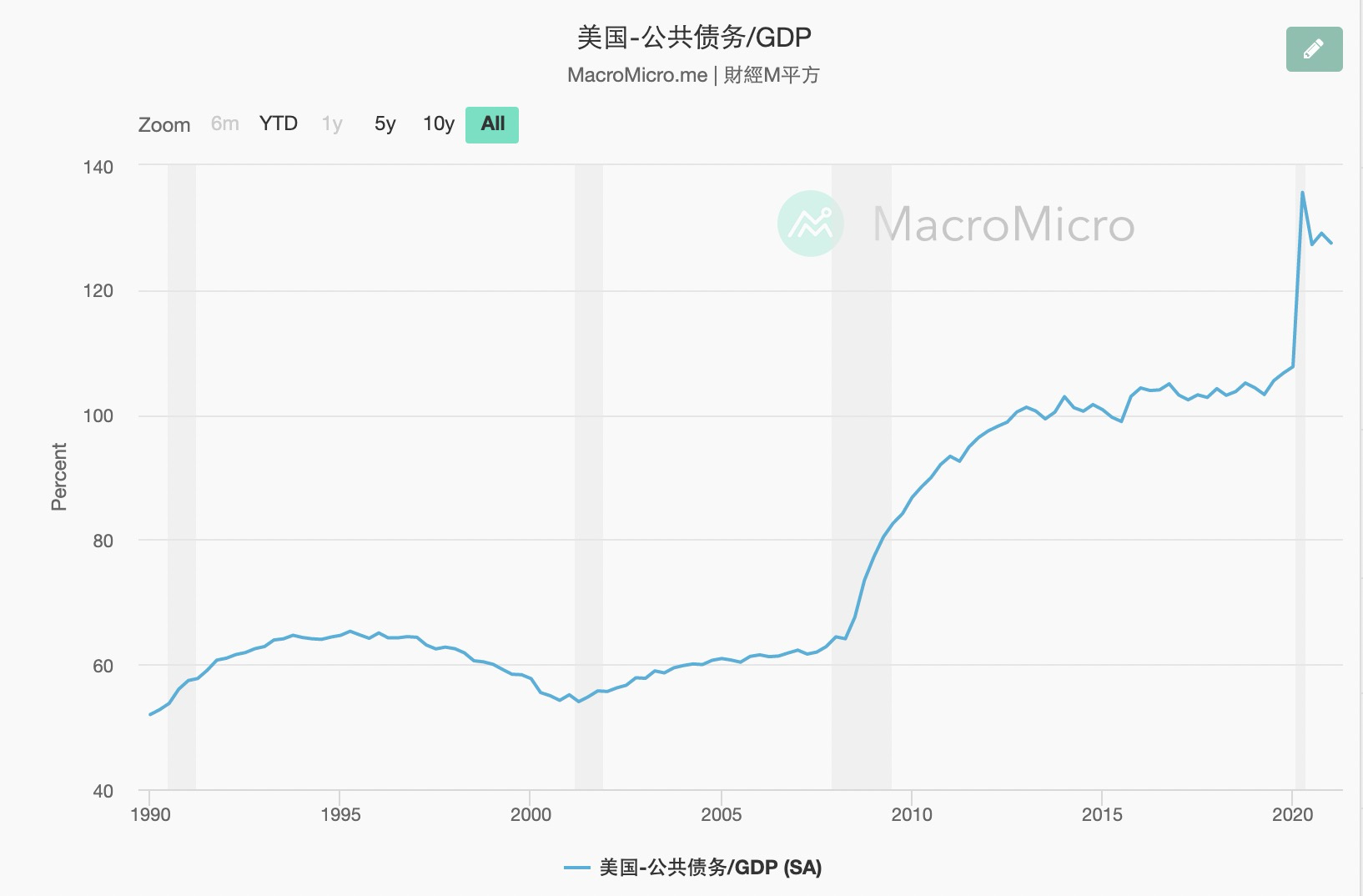

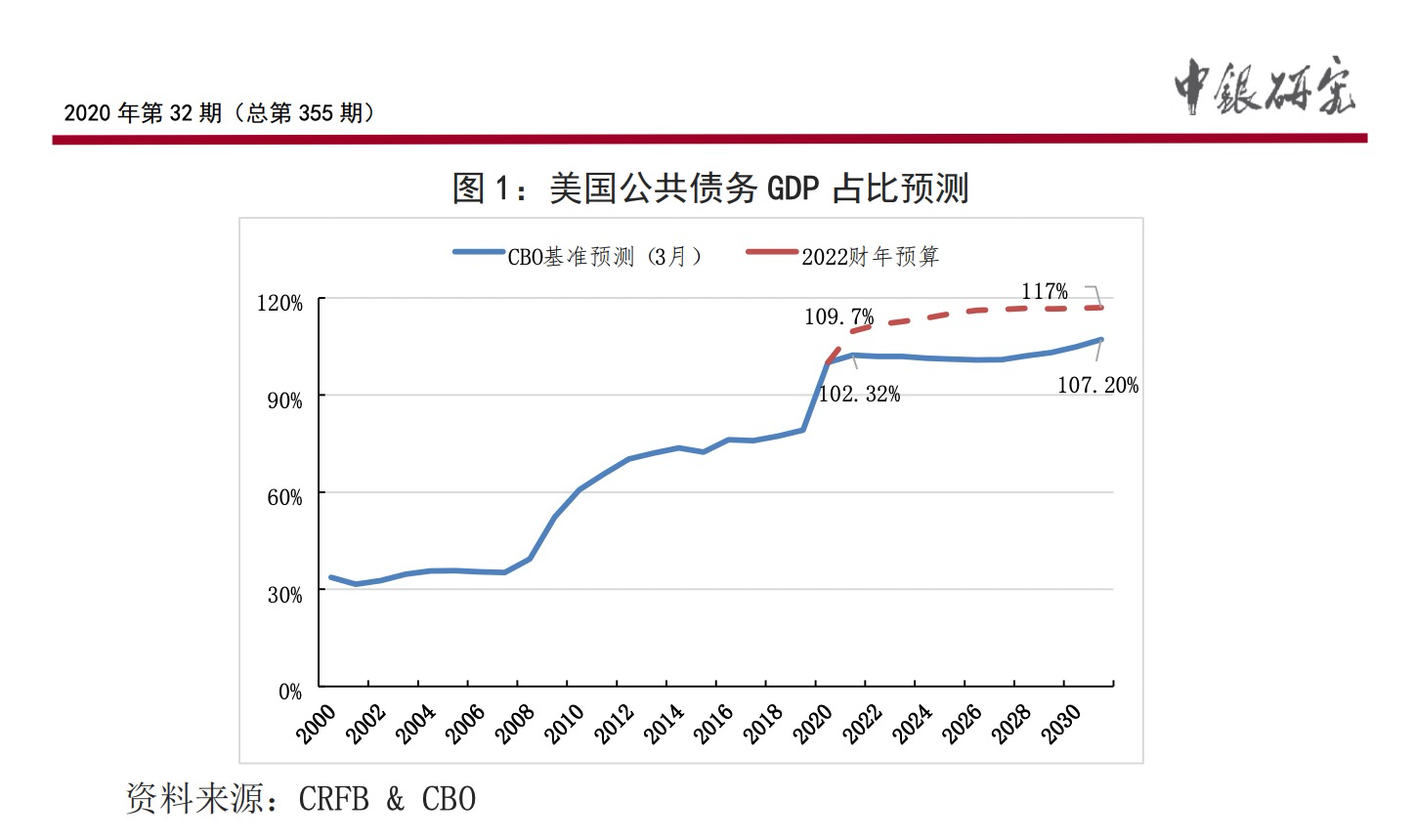

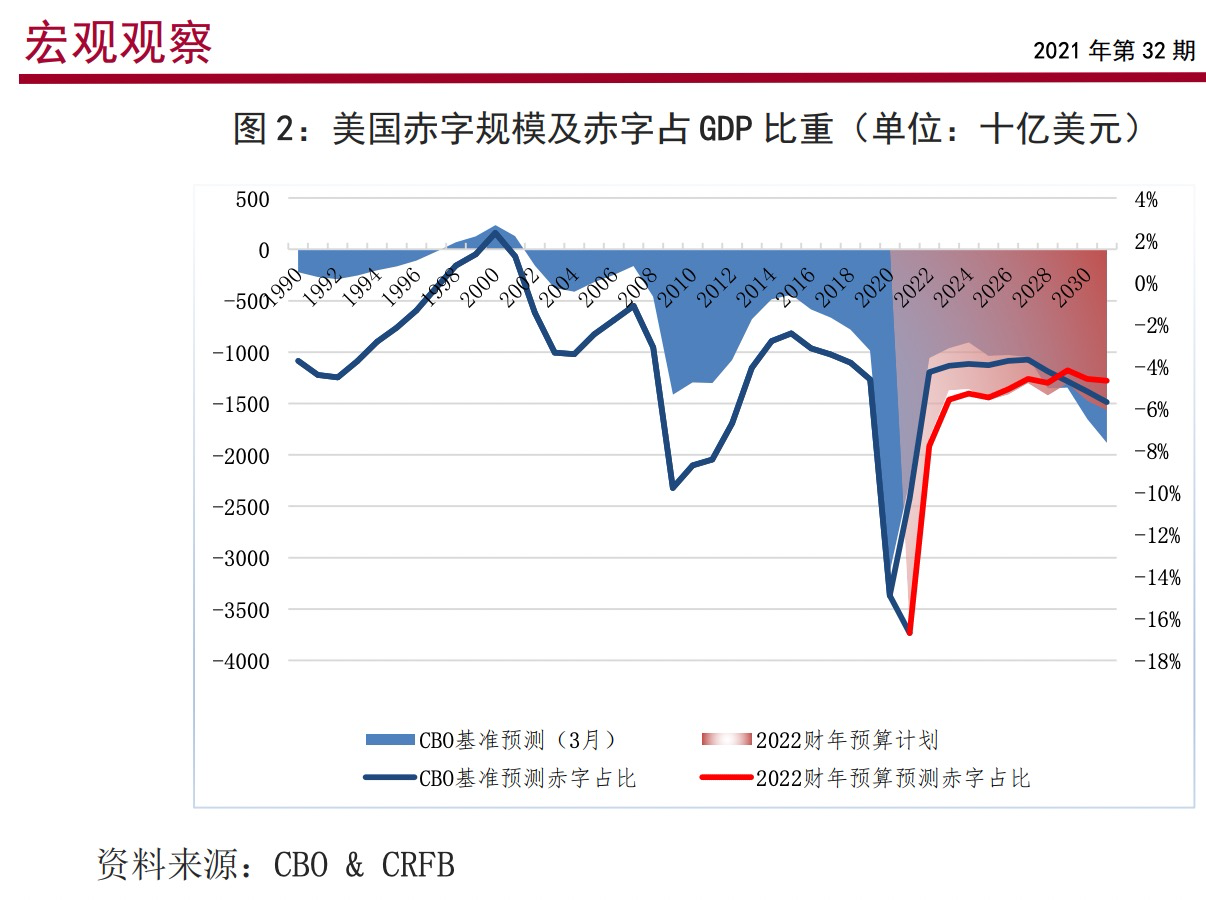

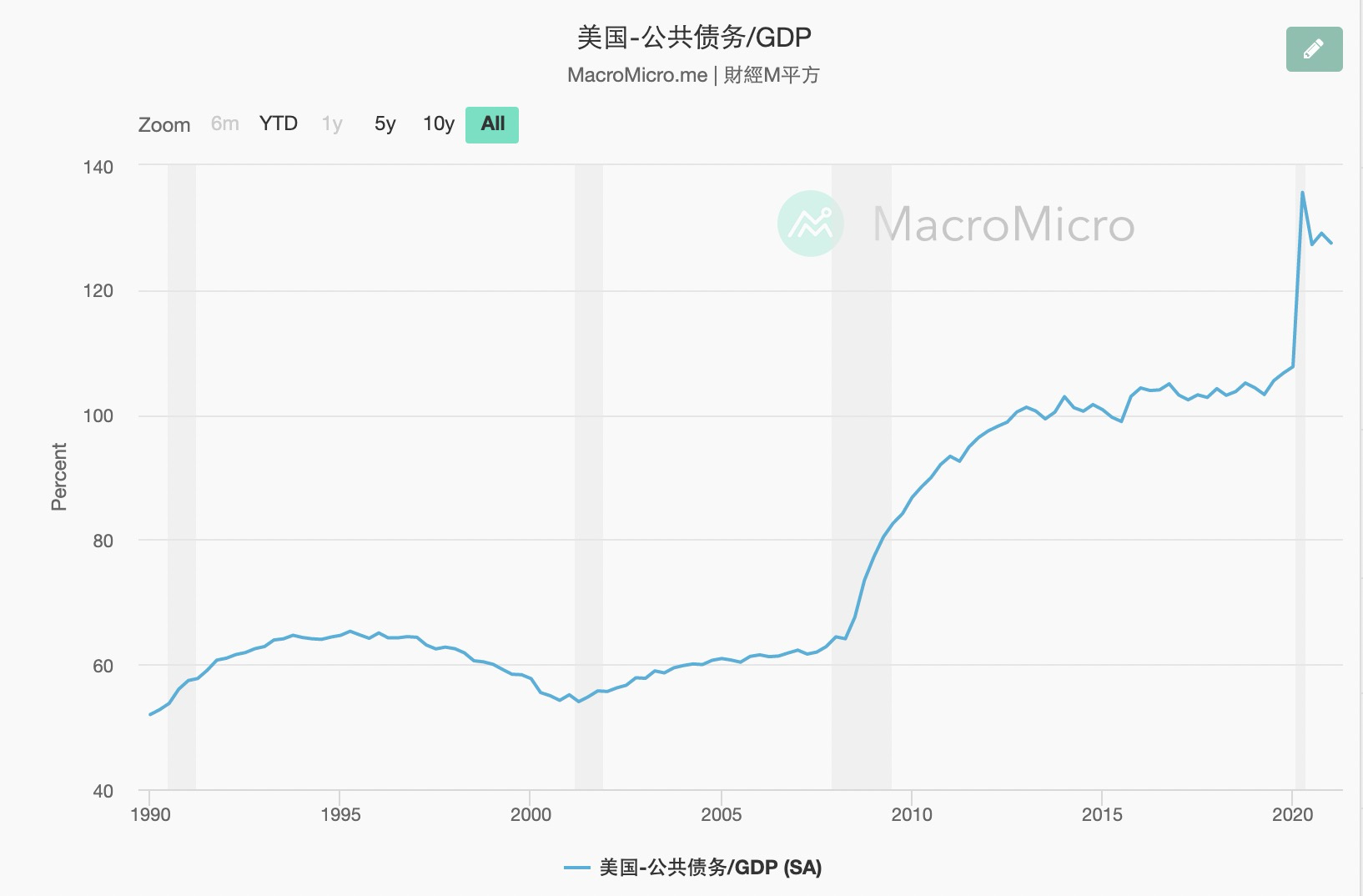

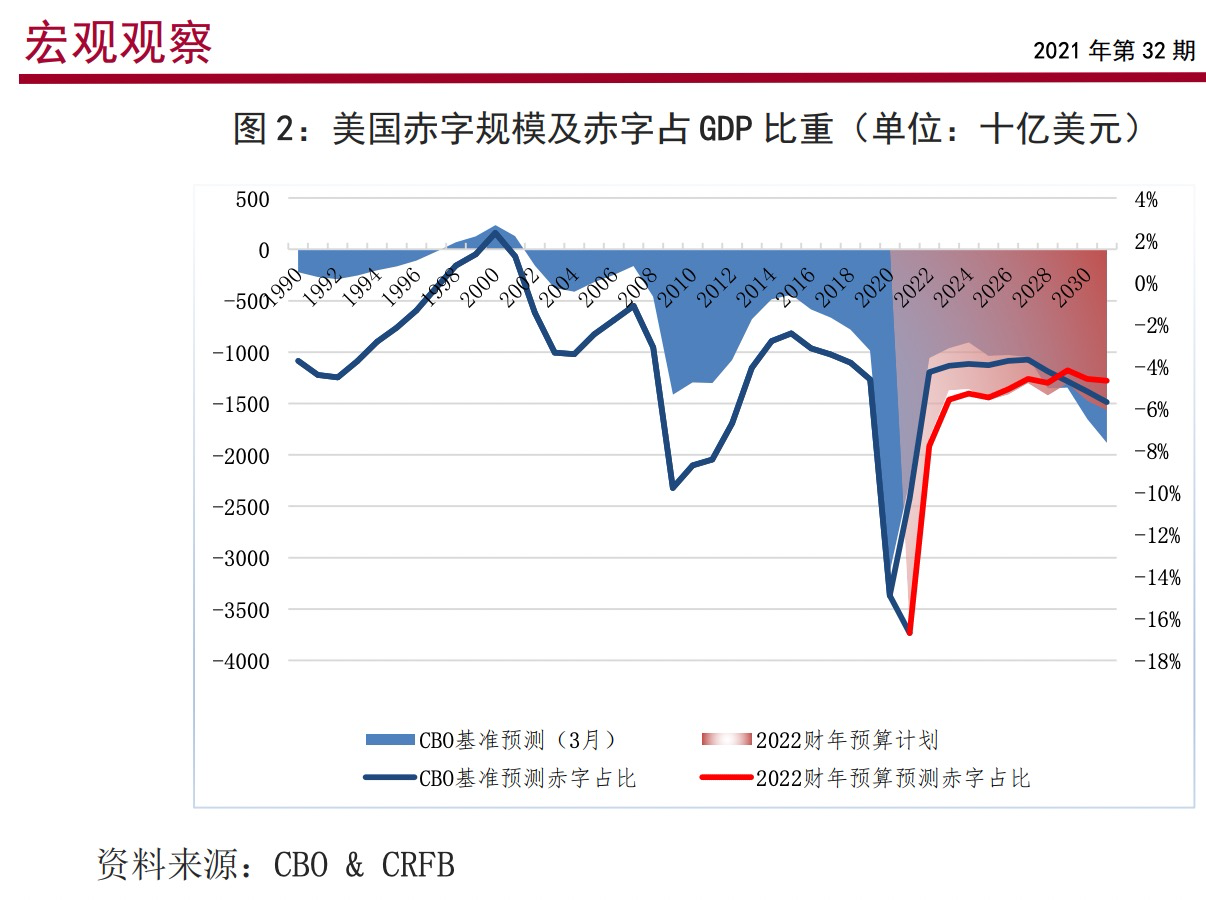

近十余年来,美国为了摆脱金融危机,政府债务总量从2007年的9万亿美元上升到2019年的22万亿美元,已经超过美国GDP

从1970年开始,欧美、日本等大部分世界发达国家逐步采用了“主权信用货币制”,在实践中总体表现较好,货币的发行量与经济增量相匹配,保持了经济的健康增长和物价的稳定。这种货币制度通常以M3、经济增长率、通胀率、失业率等作为“间接锚”,并不是完全的无锚制货币。

从1970年到2008年,美国政府在应用主权信用货币制度的过程中基本遵守货币发行纪律,货币的增长与GDP增长、政府债务始终保持适度的比例。1970年,美国基础货币约为700亿美元,2007年底约为8200亿美元,大约增长了12倍。与此同时,美国GDP从1970年的1.1万亿美元增长到2007年的14.5万亿美元,大概增长了13倍。美元在全世界外汇储备中的占比稳定在65%以上

从2008年年底至2014年10月,美联储先后出台三轮量化宽松政策,总共购买资产约3.9万亿美元。美联储持有的资产规模占国内生产总值的比例从2007年年底的6.1%大幅升至2014年年底的25.3%,资产负债表扩张到前所未有的水平。

从2008年到2019年,美国基础货币供应量从8200亿美元飙升到4万亿美元,整体约增长了5倍,与此同时,美国GDP仅增长了1.5倍,基础货币的发行增速几乎是同期GDP增速的3倍以上。在这种货币政策的驱动下,美国股市开启了十年长牛之路,股市从6000点涨到28000点。各类资产价格开始重新走上上涨之路,美国经济沉浸在一片欣欣向荣之中。

1913年美国《联邦储备法案》规定,美元的发行权归美联储所有。美国政府没有发行货币的权力,只有发行国债的权力。但实际上,美国政府可以通过发行国债间接发行货币。美国国会批准国债发行规模,财政部将设计好的不同种类的国债债券拿到市场上进行拍卖,最后拍卖交易中没有卖出去的由美联储照单全收,并将相应的美元现金交给财政部。这个过程中,财政部把国债卖给美联储取得现金,美联储通过买进国债获得利息,两全其美,皆大欢喜。

2008年前美国以国家信用为担保发行美债,美债余额始终控制在GDP的70%比例之内,国家信用良好。美债作为全世界交易规模最大的政府债券,长久以来保持稳定的收益,成为黄金以外另一种可靠的无风险资产。美国的货币供给总体上与世界经济的需求也保持着适当的比例,进一步加强了美元的信用。

美国GDP占全球GDP的比重已经从50%下降到24%,但美元仍然是主要的国际交易结算货币。尽管近年来美元在国际储备货币中的占比逐渐从70%左右滑落到62%,但美元的地位短时间内仍然看不到动摇的迹象

布雷顿森林体系解体后,各国以美元为货币“名义锚”的强制性随之弱化,但在自由选择条件下,绝大多数发展中国家仍然选择美元为“名义锚”,实行了锚定美元,允许一定浮动的货币调控制度。另有一些发展中国家选择锚定原来的宗主国,以德国马克、法国法郎和英镑等货币为“名义锚”。而主要的发达国家在货币寻锚的过程中,经历了一些波折之后,大多选择以“利率、货币发行量、通货膨胀率”等指标作为货币发行中间目标,实际上锚定的是国内资产。总之,从当前来看,世界的货币大致形成了两类发行制度:以“其他货币”为名义锚的货币发行体制和以“本国资产”为名义锚的货币发行体系,也称为主权信用货币制度。

香港采用的货币制度很独特,被称为“联系汇率”制度,又被称为“钞票局”制度,据说是19世纪一位英国爵士的发明。其基本内容是香港以某一种国际货币为锚(20世纪70年代以前以英镑为本位,80年代后改以美元为本位),即以该货币为储备发行港币,通过中央银行吞吐储备货币来达到稳定本币汇率的目标。在这种货币制度下,不仅需要储备相当规模的锚货币,其还有一个重大缺陷是必须放弃独立的货币政策,即本位货币加降息时,其也必须跟随。因此,这种货币制度只适用于小国或者小型经济体,对大国或大型经济体则不适用。

从新中国成立到如今,人民币发行制度经历了从“物资本位制”到“汇兑本位制”两个阶段,这两种不同时期实施的货币制度在当时都有效地促进了国民经济的发展。1995年以后,面对新的形势,中国人民银行探索通过改革实行了新的货币制度——“汇兑本位制”,即通过发行人民币对流入的外汇强制结汇,人民币汇率采取盯住美元的策略,从而保持人民币的汇率基本稳定。

在“汇兑本位制”下,我国主要有两种渠道完成人民币的发行。第一种,当外资到我国投资时,需要将其持有的外币兑换成人民币,这就是被结汇。结汇以后,在中国人民银行资产负债表上,一边增加了外汇资产,另一边增加了存款准备金的资产,这实际上就是基础货币发出的过程。中央银行的资产负债表中关于金融资产分为两部分,一部分是外币资产,另一部分是基础货币。基础货币包括M0和存款准备金,而这部分的准备金就是因为外汇占款而出现的。

第二种则是贸易顺差。中国企业由于进出口业务产生贸易顺差,实际上是外汇结余。企业将多余的外汇卖给商业银行,再由央行通过发行基础货币来购买商业银行收到的外汇。商业银行收到央行用于购买外汇的基础货币,就会通过M0流入社会。长此以往,就会增加通货膨胀的风险。央行为规避通货膨胀的风险,就会通过提高准备金率将多出的基础货币回收。

在“汇兑本位制”下,外汇储备可以视作人民币发行的基础或储备,且由于实行强制结汇,外汇占款逐渐成为我国基础货币发行的主要途径,到2013年末达到83%的峰值,此后略有下降,截至2019年7月末,外汇占款占中国人民银行资产总规模达到59.35%,说明有近六成人民币仍然通过外汇占款的方式发行。

现代货币理论(缩写MMT)是一种非主流[1]宏观经济学理论,认为现代货币体系实际上是一种政府信用货币体系。[2] 现代货币理论即主权国家的货币并不与任何商品和其他外币挂钩,只与未来税收与公债相对应。[3]因为主权货币具有无限法偿性质,没有名义预算约束,只存在通货膨胀的实际约束。–基本上就是:主权信用货币制度

“汇兑本位制”的实质:锚定美元

我国的人民币汇率制度基于两个环节。第一,人民币的汇率是人民币和外币之间的交换比率,是人民币与一篮子货币形成的一个比价。我国在同其他国家进行投资、贸易时,人民币按照汇率进行兑换。由于美元是目前世界上最主要的货币,所以虽然人民币与一篮子货币形成相对均衡的比价,但由于美元在一篮子货币中占有较大的比重,人民币最重要的比价货币是美元。第二,我国实行结汇制,即我国的商业银行、企业,基本上不能保存外汇,必须将收到的外汇卖给央行。因此,由于我国的货币发行的基础是外汇,而美元在我国的外汇占款、一篮子货币中占比较高,因此可以说人民币是间接锚定美元发行的。

截至2018年12月底,中国人民银行资产总规模为37.25万亿元,其中外汇占款达21.25万亿元。外汇占款在货币发行中的份额已经从2013年的83%降低至2008年初的57%左右。与此同时,央行对其他存款性公司债权迅速扩张,从2014年到2016年底扩张了2.4倍,占总资产份额从7.4%升至24.7%。这说明了随着外汇占款成为基础货币回笼而非投放的主渠道,央行主要通过公开市场操作和各类再贷款便利操作购买国内资产来投放货币,不失为在外汇占款不足的情况下供给货币的明智选择。同时,央行连续降低法定存款准备金率,提高了货币乘数,一定程度上也缓解了国内流动性不足的问题。

外汇占款在货币发行存量中的比重仍然接近60%,只是通过一些货币政策工具缓解了原来“汇兑本位制”的问题。一旦日后出现大量贸易顺差导致外汇储备增加,货币发行制度就会又回到老路上。

实施“主权信用货币制度”是大国崛起的必然选择

从根本上来说,税收是货币的信用,财政可以是货币发行的手段,而且是最高效公平的手段,央行买国债是能够自主收放的货币政策手段。一旦货币超发后,央行只需要提高利率、提高存款准备金率回收基础货币,而财政部门也可以通过增加税收、注销政府债券的方式来消除多余的货币、避免通货膨胀。

实际上,信用货币制度最大的问题在于锚的不清晰、不稳定,缺乏刚性。

特别提款权(Special Drawing Right,SDR),亦称“纸黄金”(Paper Gold),最早发行于1969年,是国际货币基金组织根据会员国认缴的份额分配的,可用于偿还国际货币基金组织债务、弥补会员国政府之间国际收支逆差的一种账面资产。 其价值由美元、欧元、人民币、日元和英镑组成的一篮子储备货币决定。

主权信用货币背景下,人民币是由国债做锚的。中央银行为了发行基础货币,需要购买财政部发行的国债,但中央银行不能购买财政部为了弥补财政亏空发行的国债。《中华人民共和国中国人民银行法》规定,中国人民银行不得直接认购、包销国债和其他政府债券。这意味着中国人民银行不能以政府的债权作为抵押发行货币,只能参与国债二级市场的交易而不能参与国债一级市场的发行,央行直接购买国债来发行基础货币的方式就被法律禁止了。因此,建立以国债为基础的人民币发行制度,必须对相关法律法规进行修改。

2017年5月 房地产

中国房地产和实体经济存在“十大失衡”——土地供求失衡、土地价格失衡、房地产投资失衡、房地产融资比例失衡、房地产税费占地方财力比重失衡、房屋销售租赁比失衡、房价收入比失衡、房地产内部结构失衡、房地产市场秩序失衡、政府房地产调控失衡

土地调控得当、法律制度到位、土地金融规范、税制结构改革和公租房制度保障,并特别强调了“地票制度”对盘活土地存量,提高耕地增量的重要意义

为国家粮食战略安全计,我国土地供应应逐步收紧,2015年供地770万亩,2016年700万亩,今年计划供应600万亩

国家每年批准供地中,约有三分之一用于农村建设性用地,比如水利基础设施、高速公路等,真正用于城市的只占三分之二,这部分又一分为三:55%左右用于各种基础设施和公共设施,30%左右给了工业,实际给房地产开发的建设用地只有15%。这是三分之二城市建设用地中的15%,摊到全部建设用地中只占到10%左右,这个比例是不平衡的

对于供过于求的商品,哪怕货币泛滥,也可能价格跌掉一半。货币膨胀只是房价上涨的必要条件而非充分条件,只是外部因素而非内部因素。内因只能是供需关系

住房作为附着在土地上的不动产,地价高房价必然会高,地价低房价自然会低,地价是决定房价的根本性因素。如果只有货币这个外因存在,地价这个内因不配合,房价想涨也是涨不起来的。控制房价的关键就是要控制地价。

拍卖机制,加上新供土地短缺,旧城改造循环,这三个因素相互叠加,地价就会不断上升—核心加大供地可解地价过高

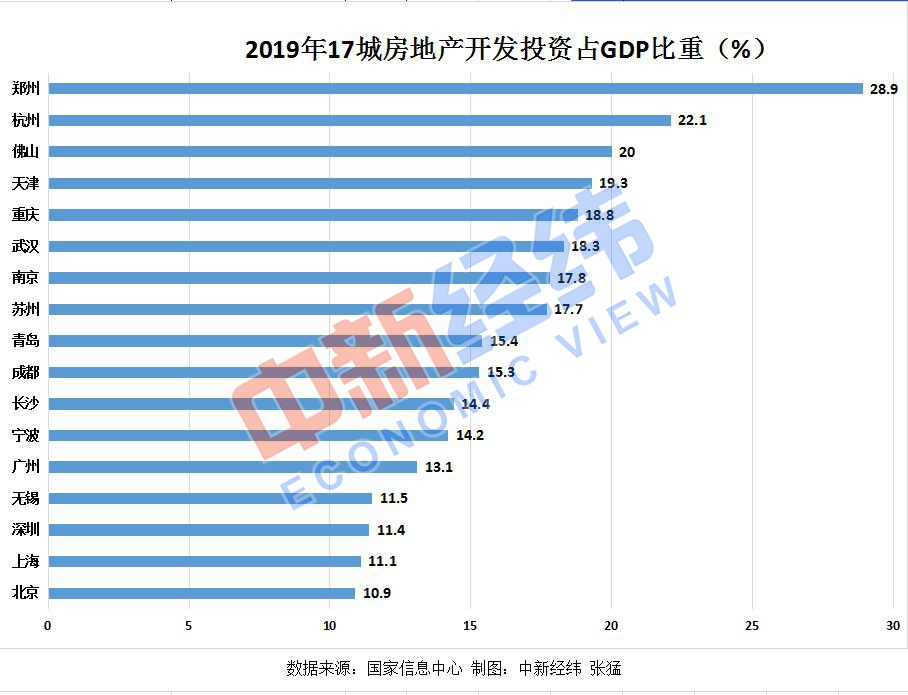

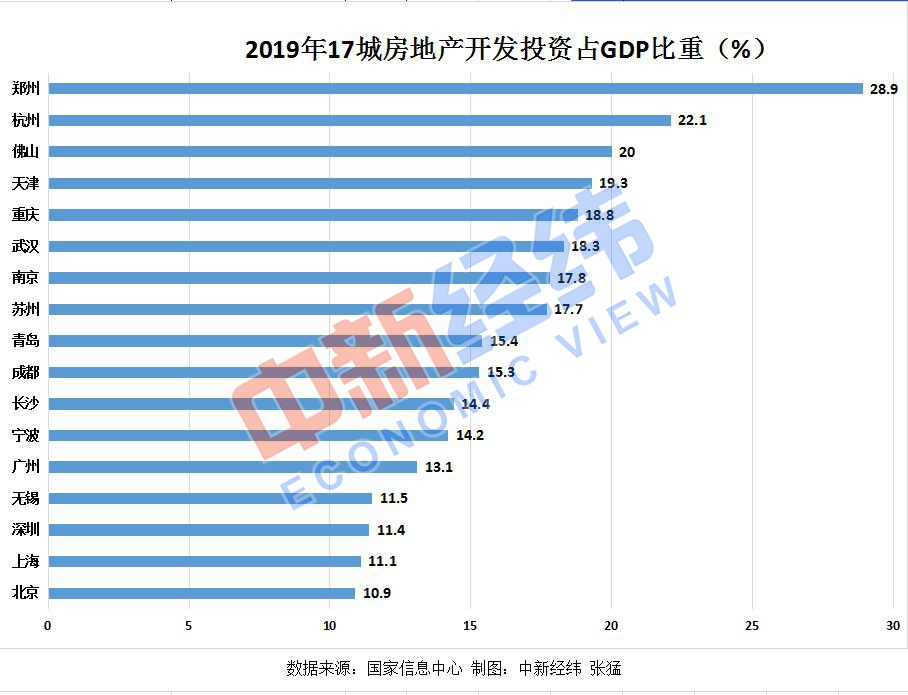

按经济学的经验逻辑,一个城市的固定资产投资中房地产投资每年不应超过25%

正常情况下,一个家庭用于租房的支出最好不要超过月收入的六分之一,超过了就会影响正常生活。买房也如此,不能超过职工全部工作年限收入的六分之一,按每个人一生工作40年左右时间算,“6—7年的家庭年收入买一套房”是合理的。—-中国每个人体制外算20年工作时间,体制内可算35年

从均价看,一线城市北京、上海、广州、深圳、杭州等,房价收入比往往已到40年左右。这个比例在世界已经处于很高的水平了。考虑房价与居民收入比,必须高收入对高房价,低收入对低房价,均价对均价。有人说,纽约房子比上海还贵,伦敦海德公园的房价也比上海高。但伦敦城市居民的人均收入要高出上海几倍。就均价而言,伦敦房价收入比还是在10年以内。

每年固定资产投资不应超过GDP的60%。如果GDP有1万亿元,固定资产投资达到1.3万亿元甚至1.5万亿元,一年两年可以,长远就会不可持续。固定资产投资不超过GDP的60%,再按“房地产投资不超过固定资产投资的25%”,也符合“房地产投资不超过GDP六分之一”这一基本逻辑。

大陆31个省会城市和直辖市中,房地产投资连续多年占GDP 60%以上的有5个,占40%以上的有16个,显然偏高

房地产17-18w亿,大概8.5w亿是直接留给卖地的地方政府

之后3-4w亿是各种建筑商供应商的辛苦钱

还有1w亿+流向的银行贷款的利息

1w亿+是各种非银金融机构,如信托和平安保险等

之后又是一轮税收,然后才是房地产商和房地产人

搞死房地产也许容易,但是你得指条明路,让这些人找到新地方做业务活着啊..

上海易居房地产研究院3月9日发布《2019年区域房地产依赖度》。该报告显示:房地产开发投资占GDP比重可以用来衡量当地经济对房地产的依赖程度,占比越高,说明经济对房地产的依赖度越高。2019年,房地产开发投资占GDP比重排名前三位的省市分别是海南、天津和重庆,占比分别为25.2%、19.3%和18.8%。

2020年杭州市GDP总量达到16106亿元,比2019年增长3.9%

有15个城市去年房地产开发投资额超过1000亿元,其中超过2000亿元的共8个,分别是杭州、郑州、广州、武汉、成都、南京、西安和昆明,杭州、郑州、广州三城更是超过了3000亿元。杭州以3397.27亿元在26城中位居榜首

2019年,除了杭州土地出让金继续领跑外,数据显示南京的卖地收入达到了1696.8亿元,同比增长77.32%,福州增幅为63.37%,昆明为59.85%,武汉为27.89%。去年土地出让金额没有超过1000亿元的城市中,合肥土地出让收入增长了33.32%,长沙增长了33.75%,贵阳增长了52%。

2020年,GDP前10强的城市依次为:上海、北京、深圳、广州、重庆、苏州、成都、杭州、武汉、南京。

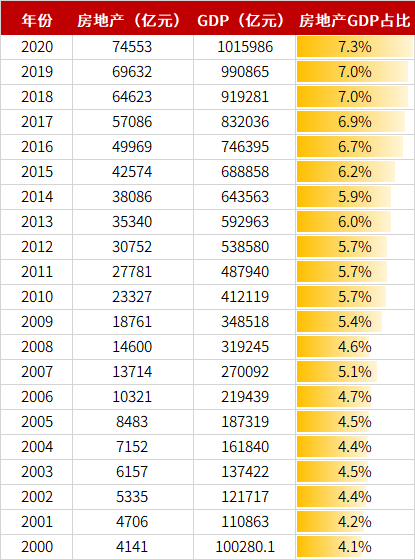

2011年,全国人民币贷款余额54.8万亿元,其中房地产贷款余额10.7万亿元,占比不到20%。这一比例逐年走高,2016年全国106万亿元的贷款余额中,房地产贷款余额26.9万亿元,占比超过25%。也就是说,房地产占用了全部金融资金量的25%,而房地产贡献的GDP只有7%左右。2016年全国贷款增量的45%来自房地产,一些国有大型银行甚至70%—80%的增量是房地产。从这个意义上讲,房地产绑架了太多的金融资源,导致众多金融“活水”没有进入到实体经济,就是“脱实就虚”的具体表现。

这些年,中央加地方的全部财政收入中,房地产税费差不多占了35%,乍一看来,这一比例感觉还不高。但考虑到房地产税费属地方税、地方费,和中央财力无关,把房地产税费与地方财力相比较,则显得比重太高。全国10万亿元地方税中,有40%也就是4万亿是与房地产关联的,再加上土地出让金3.7万亿元,全部13万亿元左右的地方财政预算收入中就有近8万亿元与房地产有关(60%)。政府的活动太依赖房地产,地方政府财力离了房地产是会断粮的,这也是失衡的。

一般中等城市每2万元GDP造1平方米就够了,再多必过剩。对大城市而言,每平方米写字楼成本高一些,其资源利用率也会高一些,大体按每平方米4万元GDP来规划。

一个城市的土地供应总量一般可按每人100平方米来控制,这应该成为一个法制化原则。100万城市人口就供应100平方千米。爬行钉住,后发制人。

人均100平方米的城市建设用地,该怎么分配呢?不能都拿来搞基础设施、公共设施,也不能都拿来搞商业住宅。大体上,应该有55平方米用于交通、市政、绿地等基础设施和学校、医院、文化等公共设施,这是城市环境塑造的基本需要。对工业用地,应该控制在20平方米以内,每平方千米要做到100亿元产值。剩下的25平用于房地产开发

房产税应包括五个要点:(1)对各种房子存量、增量一网打尽,增量、存量一起收;(2)根据房屋升值额度计税,如果1%的税率,价值100万元的房屋就征收1万元,升值到500万元税额就涨到5万元;(3)越高档的房屋持有成本越高,税率也要相对提高;(4)低端的、中端的房屋要有抵扣项,使得全社会70%—80%的中低端房屋的交税压力不大;(5)房产税实施后,已批租土地70年到期后可不再二次缴纳土地出让金,实现制度的有序接替。这五条是房产税应考虑的基本原则。

房地产调控的长效机制:一是金融;二是土地;三是财税;四是投资;五是立法。

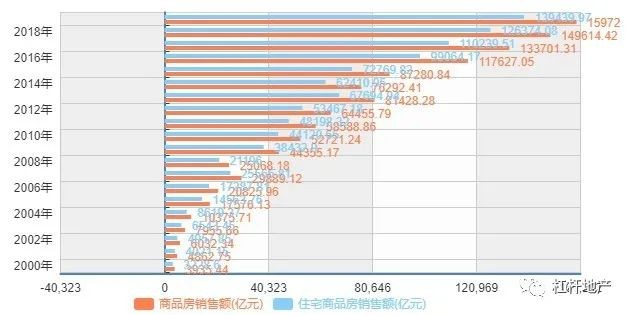

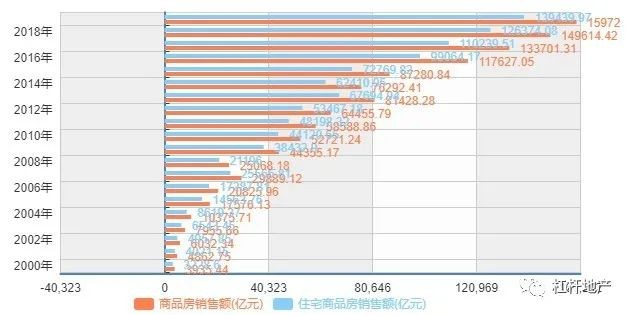

在1990年之前,中国是没有商品房交易的,那时候一年就是1000多万平方米。在1998年和1999年的时候,中国房地产一年新建房的交易销售量实际上刚刚达到1亿平方米。从1998年到2008年,这十年里平均涨了6倍,有的城市实际上涨到8倍以上,十年翻三番。2007年,销售量本来已经到了差不多7亿平方米,2008年全球金融危机发生了,在这个冲击下,中国的房产交易量也下降了,萎缩到6亿平方米。后来又过了5年,到了2012年前后,房地产的交易量翻了一番,从6亿平方米增长到12亿平方米。从2012年到2018年,又增加了5亿平方米。总之在过去的20年,中国房地产每年的新房销售交易量差不多从1亿平方米增长到17亿平方米,翻了四番多。

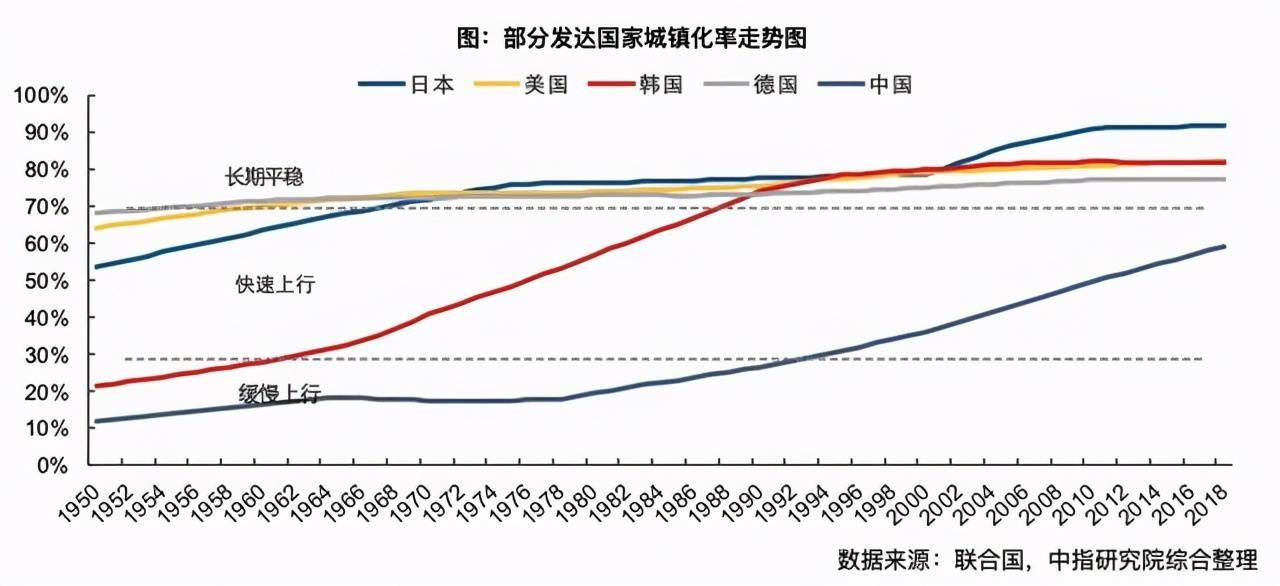

今后十几年,中国每年的房地产新房的交易量不仅不会继续增长翻番,还会每年小比例地有所萎缩,或者零增长,或者负增长。十几年以后,每年房地产的新房销售交易量可能下降到10亿平方米以内,大体上减少40%的总量。

今后十几年的房地产业发展趋势,不会是17亿平方米、18亿平方米、20亿平方米、30亿平方米,而是逐渐萎缩,当然这个萎缩不会在一年里面大规模萎缩20%、30%,大体上有十几年的过程,每年往下降。十几年后产生的销售量下降到10亿平方米以下

http://www.ce.cn/cysc/fdc/fc/202101/18/t20210118_36234860.shtml#:~:text=%E5%BD%93%E6%97%A5%E5%8F%91%E5%B8%83%E7%9A%84%E6%95%B0%E6%8D%AE%E6%98%BE%E7%A4%BA,%E7%9A%8415.97%E4%B8%87%E4%BA%BF%E5%85%83%E3%80%82: 2020年,中国商品房销售面积176086万平方米,比上年增长2.6%,2019年为下降0.1%。 商品房销售额173613亿元,增长8.7%,增速比上年提高2.2个百分点。 此前,中国商品房销售面积和销售额的最高纪录分为2018年的近17.17亿平方米和2019年的15.97万亿元。

1990年,中国人均住房面积只有6平方米;到2000年,城市人均住房面积也仅十几平方米,现在城市人均住房面积已近50平方米。人均住房面积偏小,也会产生改善性的购房需求。

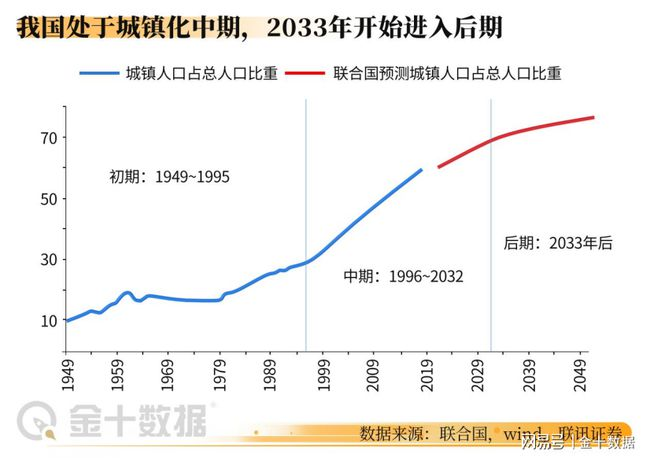

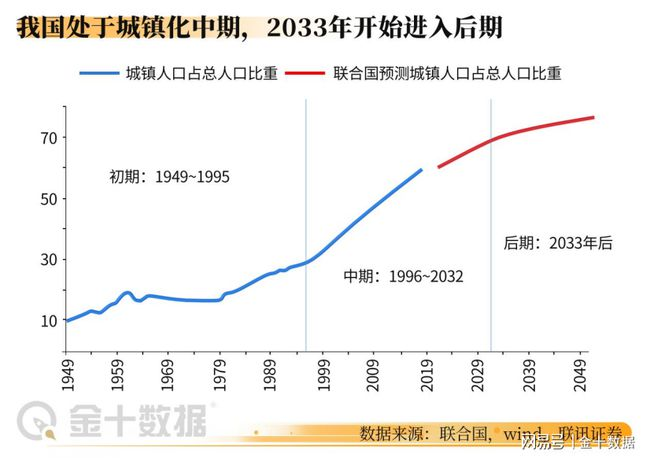

根据国家统计局公布的数据,1982年至2019年,我国常住人口城镇化率从21.1%上升至60.6%,上升超过39个百分点;同期,户籍人口城镇化率仅从17.6%上升至44.4%,上升不到27个百分点。

经济日报-中国经济网北京2月28日讯国家统计局网站2月28日发布我国2020年国民经济和社会发展统计公报。 公报显示,2020年末,我国常住人口城镇化率超过60%。Feb 28, 2021

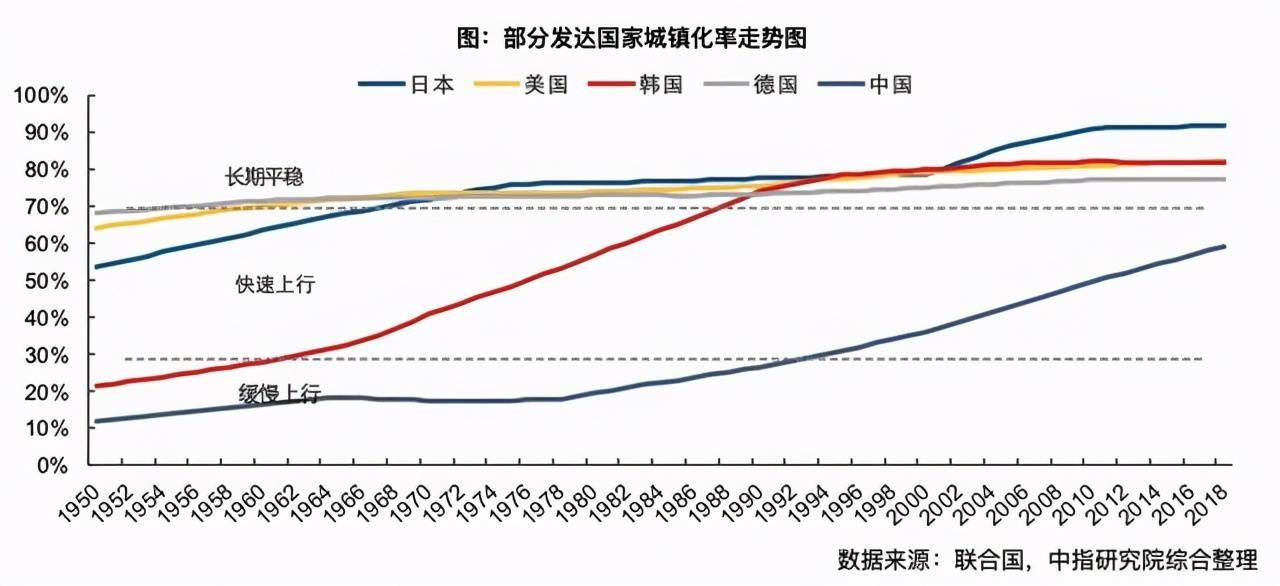

官方数据显示,2020年,我国的城镇化率高达63.89%,比发达国家80%的平均水平低了16.11%,与美国82.7%的城镇化水平还有18.81%的距离。

当前我国人均住房面积已经达到近50平方米

2012年,住建部下发了一个关于住宅和写字楼等各种商品性房屋的建筑质量标准,把原来中国住宅商品房30年左右的安全标准提升到了至少70年,甚至100年。这意味着从2010年以后,新建造的各种城市商品房,理论上符合质量要求的话,可以使用70年到100年,这也就是说老城市的折旧改造量会大量减少。

实际据说12年后因为利润率的原因房子质量在下降?!待证

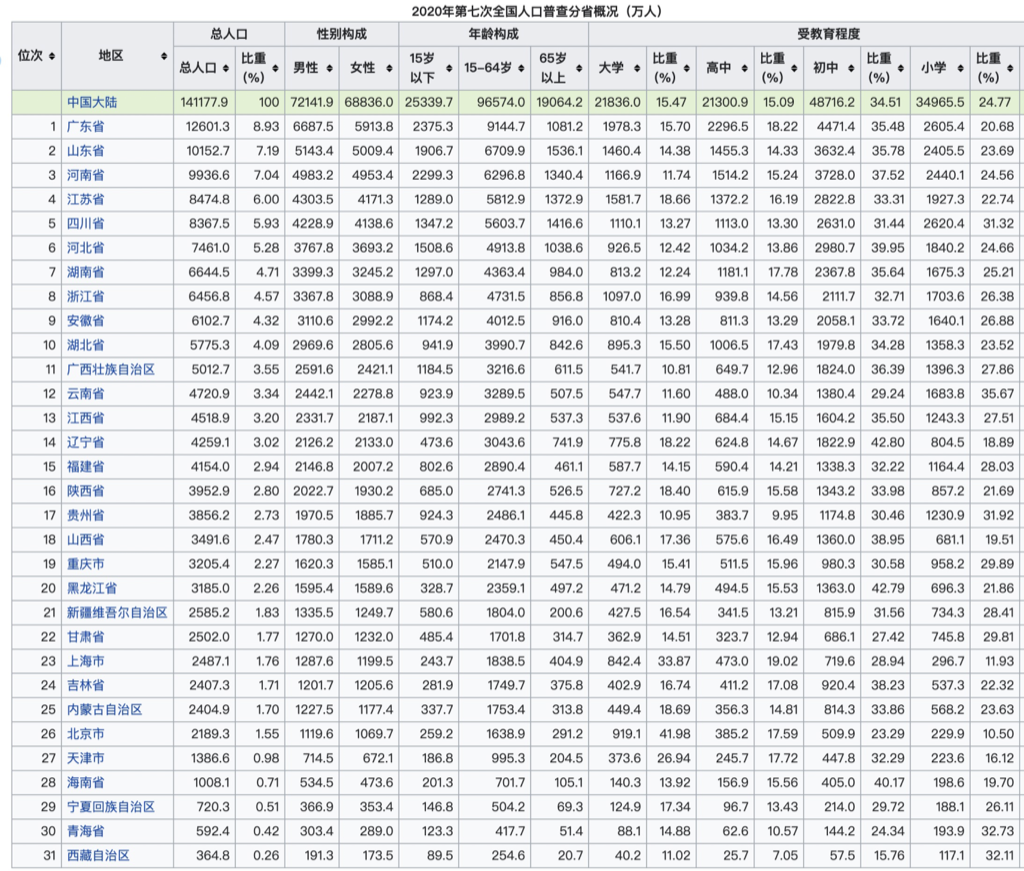

中国各个省的省会城市大体上发展规律都会遵循“一二三四”的逻辑。所谓“一二三四”,就是这个省会城市往往占有这个省土地面积的10%不到,一般是5%—10%;但是它的人口一般会等于这个省总人口的20%;它的GDP有可能达到这个省总GDP的30%;它的服务业,不论是学校、医院、文化等政府主导的公共服务,还是金融、商业、旅游等市场化的服务业,一般会占到这个省总量的40%。

河南省有1亿人口,郑州目前只有1000万人口。作为省会城市,应承担全省20%的人口,所以十几年、20年以后郑州发展成2000万人口一点不用惊讶。同样的道理,郑州的GDP现在到了1万亿元,整个河南5万亿元,它贡献了20%,如果要30%的话应该是1.5万亿元,还相差甚远。对于服务业,一个地方每100万人应该有一个三甲医院,如果河南省1亿人口要有100个的话,郑州就应该有40个,它现在才9个三甲医院,每造一个三甲医院投资20多亿元,产生的营业额也是20多亿元,作为服务业,营业额对增加值贡献比率在80%以上。

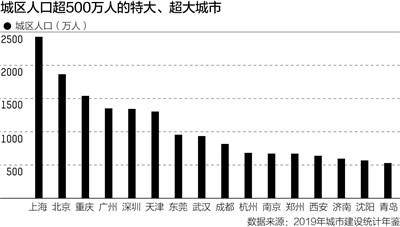

大家可以关注现在近十个人口超过1000万的国家级超级大城市,根据这些省总的经济人口规模去算一下,它们都有十几年以后人口增长500万以上的可能。只要人口增长了,城市住宅房地产就会跟上去。所以我刚才说的大都市、超级大城市,人口在1000万—2000万之间的有一批城市还会扩张,过了2000万的,可能上面要封顶,但是在1000万—2000万之间的不会封顶,会形成它的趋势。

在今后的十几年,房地产开发不再是四处开花,而会相对集聚在省会城市及同等级区域性中心城市、都市圈中的中小城市和城市群中的大中型城市三个热点地区。

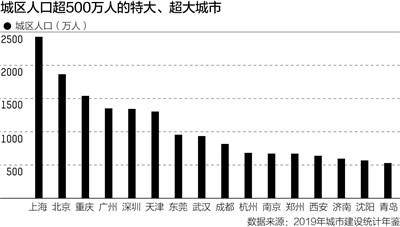

根据住房和城乡建设部于2020年底最新公布的《2019年城市建设统计年鉴》,符合中国“超大城市”标准的共有上海、北京、重庆、广州、深圳、天津。

东莞、武汉、成都、杭州、南京、郑州、西安、济南、沈阳和青岛这10个城市的城区人口处于500万到1000万之间,属于特大城市。Jan 12, 2021

根据住建部最新数据,2019年底,长沙城区人口384.75万人,建成区面积377.95平方公里。 与2018年的374.43万人相比,2019年底长沙城区人口增加约10万人。 注意,这个城区的统计范围包括雨花、岳麓、芙蓉、天心、开福、望城六区,应该不包括长沙县,因为长沙县目前不是设区市,也不是县级市。Jan 26, 2021

长沙市总人口810万

从通货膨胀看,我国M2已经到了190万亿元,会不会今后十年M2再翻两番?不可能,这两年国家去杠杆、稳金融已经做到了让M2的增长率大体上等于GDP的增长率加物价指数,这几年的GDP增长率百分之六点几,物价指数加两个点,所以M2在2017年、2018年都是八点几,2019年1—6月份是8.5%,基本上是这样。M2如果是八点几的话,今后十几年,基本上是GDP增长率加物价指数,保持均衡的增长。如果中国的GDP今后十几年平均增长率大体在5%—6%,房地产价格的增长大体上不会超过M2的增长率,也不会超过GDP的增长率,一般会小于老百姓家庭收入的增长率。

9万多个房产企业中,排名在前的15%大开发商在去年的开发,实际的施工、竣工、销售的面积,在17亿平方米里面它们可能占了85%。意思是什么呢?15%的企业解决了17亿平方米的85%,就是14亿多平方米,剩下的企业只干了那么2亿多平方米,有大量的空壳公司。

中国的房地产企业,我刚才说9万多个,9万多个房产商的总负债率2018年是84%。中国前十位的销售规模在1万亿元左右的房产商,它们的负债率也是在81%。

REITs: Real Estate Investment Trusts,译为房地产投资信托基金

地王的产生都是因为房产商背后有银行,所以政府的土地部门,只要资格审查的时候查定金从哪儿来,拍卖的时候资金从哪儿来,只要审查管住这个,就一定能管住地王现象的出现

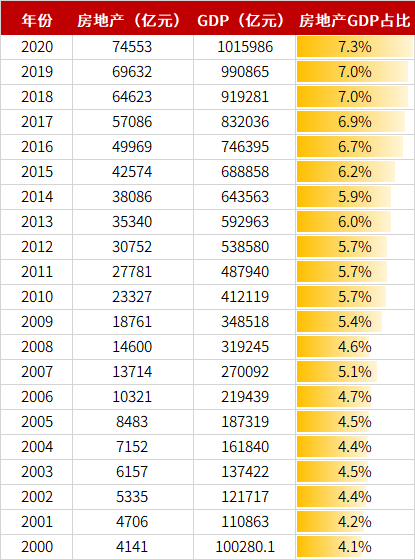

2000年,中国房地产增加值仅为4141亿元,在当年GDP中占比4.1%。二十年后,2020年中国房地产增加值跃升至74553亿元,GDP占比7.3%。在20年时间里,房地产增加值大涨70412亿元,增长率达78%。

房地产增加值从1万亿元增加到2万亿元用了5年,从2万亿元到3万亿元用了3年,从5万亿元到7万亿年只用了4年。房地产新创造价值的增长速度越来越快。

香港公租房面积:現時公屋單位的人均室內面積不得小於七平方米,惟房委會近年興建單位時,面積均僅停留在合格線,供一至二人入住的甲類單位,面積只及14平方米,二至三人的乙類單位也只有21平方米。 尤有甚者,有報章整理房委會資料,2020至2024年度的甲、乙類單位佔52%,總數3.44萬個,較跟2015至2019年度落成單位高11個百分點。Jan 19, 2021

对外开放

近40年以来世界贸易的格局,国际贸易的产品结构、企业组织和管理的方式,国家和国家之间贸易有关的政策均发生了重要的变化。货物贸易中的中间品的比重上升到70%以上,在总贸易量中服务贸易的比重从百分之几变成了30%。

产品交易和贸易格局的变化,导致跨国公司的组织管理方式发生变化,谁控制着产业链的集群、供应链的纽带、价值链的枢纽,谁就是龙头老大。由于世界贸易格局特征的变化,由于跨国公司管理世界级的产品的管理模式的变化,也就是“三链”这种特征性的发展,引出了世界贸易新格局中的一个新的国际贸易规则制度的变化,就是零关税、零壁垒和零补助“三零”原则的提出,并将是大势所趋。中国自贸试验区的核心,也就是“三零”原则在自己这个区域里先行先试,等到国家签订FTA的时候,自贸试验区就为国家签订FTA提供托底的经验。

现在一个产品,涉及几千个零部件,由上千个企业在几百个城市、几十个国家,形成一个游走的逻辑链,那么谁牵头、谁在管理、谁把众多的几百个上千个中小企业产业链中的企业组织在一起,谁就是这个世界制造业的大头、领袖、集群的灵魂。

能提出行业标准、产品标准的企业往往是产品技术最大的发明者。谁控制供应链,谁其实就是供应链的纽带。你在组织整个供应链体系,几百个、上千个企业,都跟着你的指挥棒,什么时间、什么地点、到哪儿,一天的间隙都不差,在几乎没有零部件库存的背景下,几百个工厂,非常有组织、非常高效地在世界各地形成一个组合。在这个意义上讲,供应链的纽带也十分重要。

50年前关税平均是50%—60%。到了20世纪八九十年代,关税一般都降到了WTO要求的关税水平,降到了10%以下。WTO要求中国的关税也要下降。以前我们汽车进口关税最高达到170%。后来降到50%。现在我们汽车进口关税还在20%的水平。但我们整个中国的加权平均的关税率,20世纪八九十年代是在40%—50%,到了90年代末加入WTO的时候到了百分之十几。WTO给我们一个过渡期,要求我们15年内降到10%以内。我们到2015年的确降到9.5%,到去年已经降到7.5%。现在整个世界的贸易平均关税已经降到了5%以内,美国现在是2.5%。

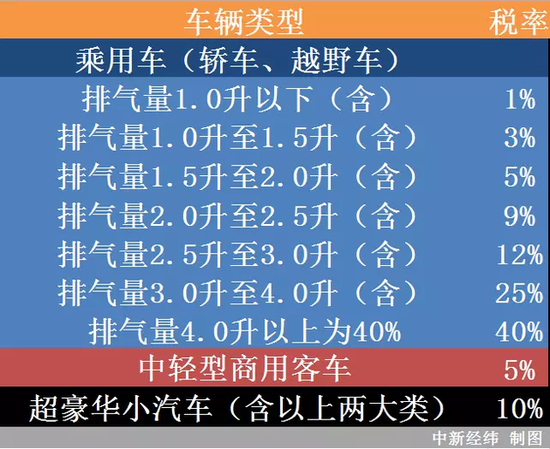

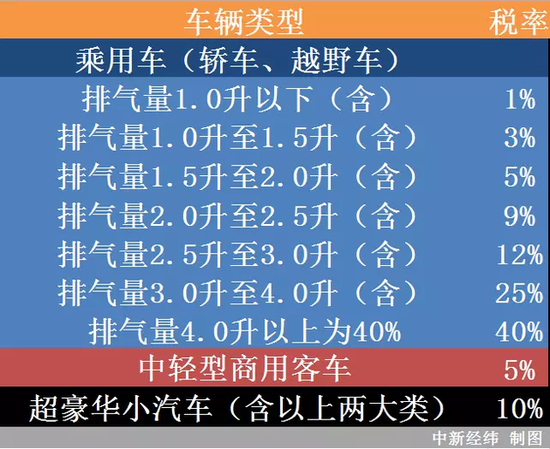

目前对于进口汽车收取的关税税率是25%,还有对进口车收取17%的增值税,而根据汽车的排量收取不同的消费税税率。 排量在在1.5升(含)以下3%,1.5升至2.0升(含) 5%,2.0升至2.5升(含) 9%,2.5升至3.0升(含)12%,3.0升至4.0升(含) 15%,4.0升以上20%。Jun 26, 2020

假定这辆到岸价24万的进口车为中规进口车(原厂授权,4S店销售),排气量为4.0以上,且到岸时间为5月1日前,套入相关计算公式,则其所要缴纳税费为:

关税:24万×25% =6万

消费税:(24万+6万)÷(1-40%)×40% =20万

增值税:(24万+6万+20万)×17% =8.5万

税费合计34.5万,加上24万的到岸价,总共58.5万,即这辆进口汽车的抵岸价。

常规而言,这个价格与90万指导价间的31.5万价差,即为运输等成本费用和国内经销商的利润。

在这七八年,FTA,双边贸易体的讨论,或者是一个地区,五六个国家、七八个国家形成一个贸易体的讨论就不断增加,成为趋势。给人感觉好像发达国家都在进行双边谈判,把WTO边缘化了

所谓自由贸易协定(Free Trade Agrement:FTA)是指两个或两个以上的国家(包括独立关税地区)根据WTO相关规则,为实现相互之间的贸易自由化所进行的地区性贸易安排。 由自由贸易协定的缔约方所形成的区域称为自由贸易区。 FTA的传统含义是缔约国之间相互取消货物贸易关税和非关税贸易壁垒。

2019年10月8日,日本驻美国大使杉山晋辅与美国贸易谈判代表莱特希泽在白宫正式签署新日美贸易协定。美国总统特朗普不仅亲自出席见证签字,还邀请多位西北部农业州农民代表参加,声称自己为美国农民赢得了巨大市场,巧妙地将国际贸易协定变成了国内拉票筹码。

中国已经形成了世界产业链里面最大的产业链集群,但是这个集群里面,我们掌控纽带的,掌控标准的,掌控结算枢纽的,掌控价值链枢纽的企业并不多。比如华为,华为的零部件,由3600多家大大小小供应链上的企业生产。这全球的3000多家企业每年都来开供应链大会。华为就是掌控标准。它的供应链企业比苹果多两倍。为什么?苹果主要做手机,华为既做手机又做服务器、通信设备。通信设备里面的零部件原材料更多。所以,它掌控产业链上中下游的集群,掌控标准,也掌控价值链中的牵制中枢。

零关税第一个好处:对进口中间品实行零关税,将降低企业成本,提高产品的国际竞争力。

当中国制造业实施零关税的时候,事实上对于整个制造业产业链的完整化、集群化和纽带、控制能力有好处,对于中国制造业的产业链、供应链、价值链在中国形成枢纽、形成纽带、形成集团的龙头等各方面会有提升作用,这是第二个好处。

关税下降,会促进中国的生产力结构的提升,促进我们企业的竞争能力的加强,使得我们工商企业的成本下降。

我们现在差不多有6.6亿吨农作物粮食是在中国的土地上生产出来的,但是我们现在每年要进口农产品1亿吨。加在一起,也就是中国14亿人,一年要吃7.6亿吨农作物。这1亿吨里面,有个基本的分类。我们现在进口的1亿吨里面,有8000多万吨进口的是大豆、300多万吨小麦、300多万吨玉米、300多万吨糖,另外就是进口的猪肉、牛肉和其他的肉类,也有几百万吨。

从2010年起步,当年人民币只有近千亿元的结算量,从这些年发展来看,2018年已经到7万亿元了。也就是说,中国进出口贸易里面有7万亿元人民币是人家收了人民币而不去收美元

自贸区

在过去的40年,我们国家的开放有五个基本特点:

第一个就是以出口导向为基础,利用国内的资源优势和劳动力的比较优势,推动出口发展,带动中国经济更好地发展;

第二个就是以引进外资为主,弥补我们中国当时还十分贫困的经济和财力;

第三个就是以沿海开放为主,各种开发区或者各种特区,包括新区、保税区,都以沿海地区先行,中西部内陆逐步跟进;

第四个就是开放的领域主要是工业、制造业、房地产业、建筑业等先行开放,至于服务业、服务贸易、金融保险业务开放的程度比较低,即以制造业、建筑业等第二产业开放为主;

第五个就是我们国家最初几十年的开放以适应国际经济规则为主,用国际经济规则、国际惯例倒逼国内营商环境改革、改善,倒逼国内的各种机制体制变化,是用开放倒逼改革的这样一个过程。

2012年以后我们每年退休的人员平均在1500万人左右,但每年能够上岗的劳动力,不管农村的、城市的,新生的劳动力是1200万左右。实际最近五年,我们每年少了300万劳动力补充。

本来GDP应该每掉1个点退出200万就业人口。为什么几年下来没有感觉到有500万、1000万下岗工人出现呢?就是因为人口出现了对冲性均衡,正好这边下降,要退出人员,跟那边补充的人员不足,形成了平衡,所以实际上我们基础性劳动力条件发生了变化,人口红利逐步退出。

如果一个国家在五到十年里,连续都是世界第一、第二、第三的进口大国,那一定成为世界经济的强国。要知道进口大国是和经济强国连在一起的,美国是世界第一大进口国,它也理所当然是世界最大的经济强国。

中美贸易战

关于中国加入WTO,莱特希泽有五个观点:一是中国入世美国吃亏论;二是中国没有兑现入世承诺;三是中国强制美国企业转让技术;四是中国的巨额外汇顺差造成了美国2008年的金融危机;五是中国买了大量美国国债,操纵了汇率。

2008年美国金融危机原因是2001年科技互联网危机后,当时股市一年里跌了50%以上,再加上“9·11”事件,美国政府一是降息,从6%降到1%,二是采取零按揭刺激房地产,三是将房地产次贷在资本市场1∶20加杠杆搞CDS,最终导致泡沫经济崩盘。2007年,美国房地产总市值24.3万亿美元、占GDP比重达到173%;股市总市值达到了20万亿美元、占GDP比重达到135%。2008年金融危机后,美国股市缩水50%,剩下10万亿美元左右;房地产总市值缩水40%,从2008年的25万亿美元下降到2009年的15万亿美元。

一个成熟的经济体,政府每年总有占GDP 20%—30%的财政收入要支出使用,通常会生成15%左右的GDP,这部分国有经济产生的GDP是通过政府的投资和消费产生的,美国和欧洲各国都是如此。比如2017年,美国的GDP中有13.5%是美国政府财力支出形成的GDP。中国政府除了财政税收以外,还有土地批租等预算外收入,所以,中国政府财力支出占GDP的比重相对高一点,占17%左右

自1971年布雷顿森林体系解体,美元脱离了金本位,形成“无锚货币”,美元的货币发行体制转化为政府发债,美联储购买发行基础货币之后,全球的基础货币总量如脱缰野马,快速增长。从1970年不到1000亿美元,到1980年的3500亿美元,到1990年的7000亿美元,到2000年的1.5万亿美元,到2008年的4万亿美元,到2017年的21万亿美元。其中,美元的基础货币也从20世纪70年代的几百亿美元发展到今天的6万亿美元。

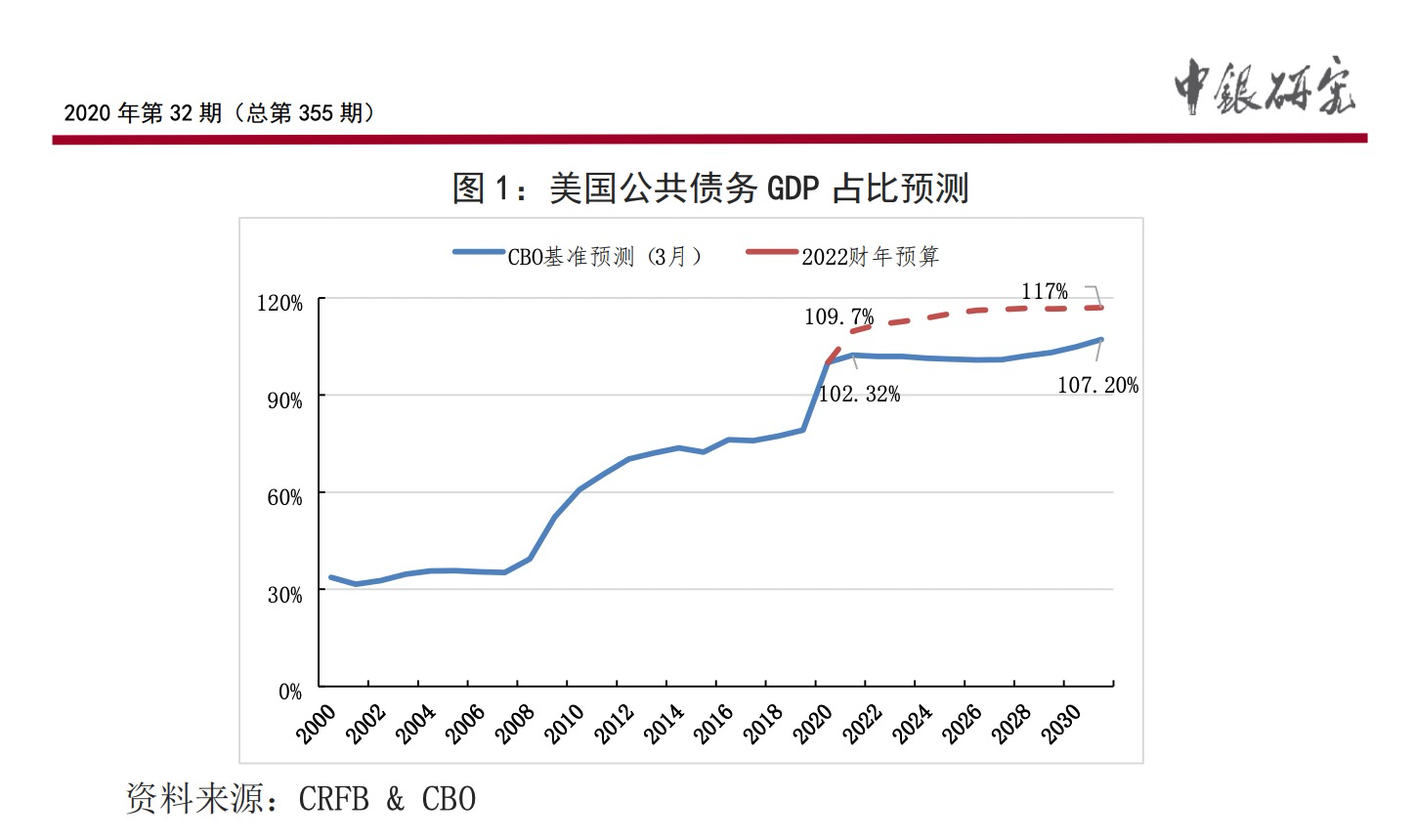

报告还预计,截至2021财年底,美国联邦公共债务将达23万亿美元,约占美国GDP的103%;到2031财年,美国联邦公共债务占GDP的比重将进一步升至106%。Jul 2, 2021

以下资料来源:https://pdf.dfcfw.com/pdf/H3_AP202106171498408427_1.pdf?1623944100000.pdf

2020-01

上市公司几千家,几十家金融企业每年利润几乎占了几千家实体经济企业利润的50%,这个比重太高,造成我们脱实向虚。三是金融企业占GDP的比重是百分之八点几,是全世界最高的。世界平均金融业GDP占全球GDP的5%左右,欧洲也好、美国也好、日本也好,只要一到7%、8%,就会冒出一场金融危机,自己消除坏账后萎缩到5%、6%,过了几年,又扩张达到7%、8%,又崩盘。

SWIFT 是 Society for Worldwide Interbank Financial Telecommunications 的缩写,翻译成中文叫做「环球银行金融电讯协会」。看名字就知道,他是个搞通讯的,还冠冕堂皇的是一个非盈利性组织。

另外,SWIFT 官网上是这么用中文介绍自己的:SWIFT 为社群提供报文传送平台和通信标准,并在连接、集成、身份识别、数据分析和合规等领域的产品和服务(我一字未改,这多语言做的……语句都不通顺,好在不影响理解);用英文则是这么介绍的:SWIFT is a global member-owned cooperative and the world’s leading provider of secure financial messaging services。