等额本息和等额本金以及提前还贷误区

等额本息和等额本金以及提前还贷误区

1 等额本金和等额本息的差异

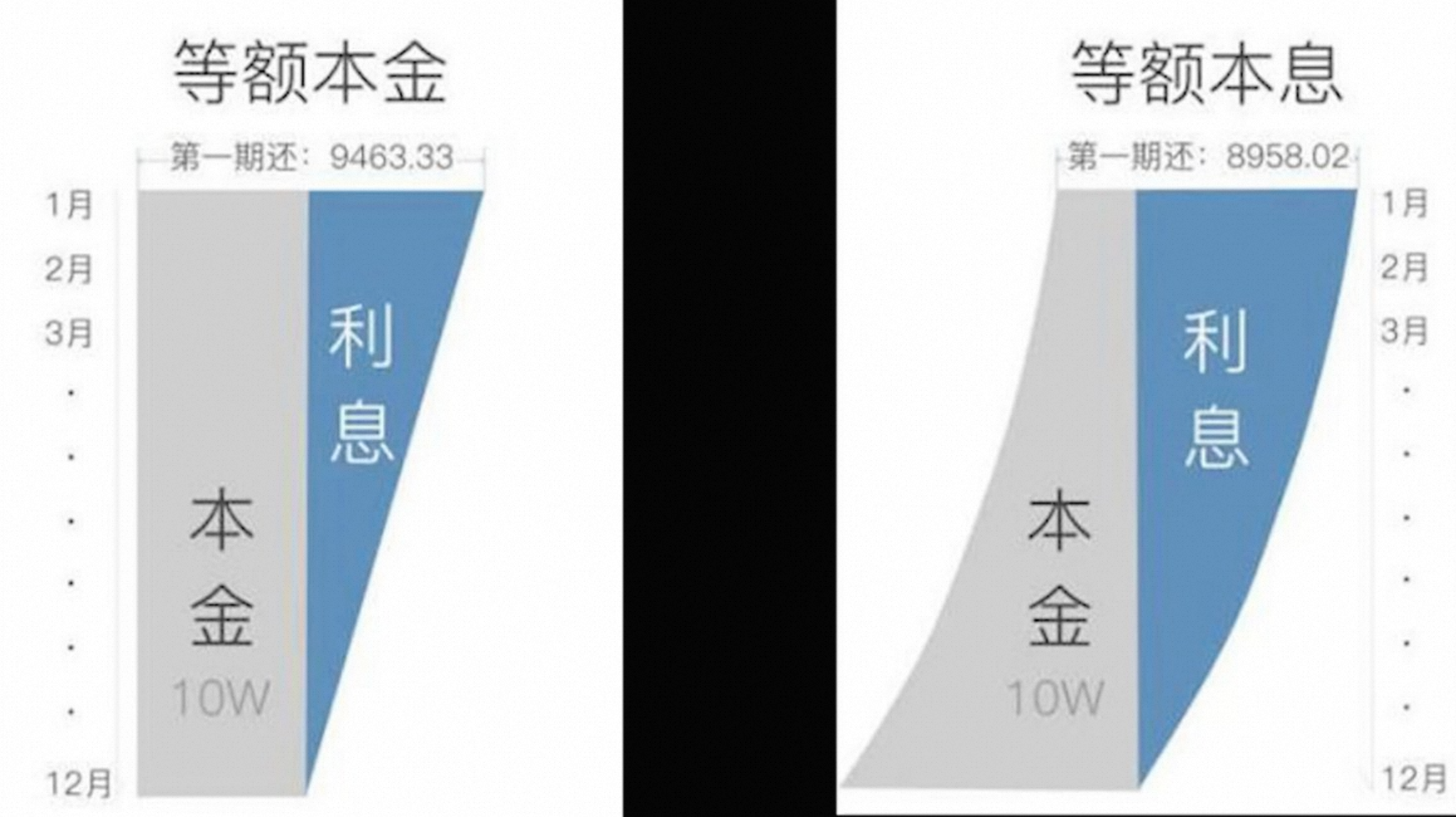

没有差异。等额本金=等额本息+每月提前还贷一点点()

2 为什么等额本金总利息少

因为每个月等额本金还款多,第一个月后欠本金少了,后面每个月都是这样,所还本金更多,最终总利息自然更少

其实可以用极限思维来分析他们的差异:假设等额本息和等额本金都借100万,周期一个月(没看错,一个月还清,极限假设),所以一个月后他们还钱一样多!所以这个时候没有任何区别;现在继续假设,假如还款周期是2个月,那么等额本金在第一个月还钱多,导致等额本金在第二个月的时候欠钱少了,到第二个月月底还清所有欠款的时候利息要少(本金少了)——这才是他们的差异,所以是没区别的。等额本金这两个月相当于欠了银行150万一个月(第一个月欠100万,第二个月欠50万) 应还利息就是150万 乘以 月利率;等额本息相当于欠了银行 151万(第一个月欠100万,第二个月51万,因为第一个月还钱的时候只还了49万本金),所以应还利息就是 151万 乘以 月利率;欠得多利息就多天经地义这里没有投机、没有人吃亏

再或者换个思路:第一个月借100万,月底都还清,此时利息一样多;然后再借出来99.X万,这时等额本金借得少这个X更小,所以从第二个月开始等额本金还的利息少,归根结底换利息少是因为借得少(即现实中的还本金多)

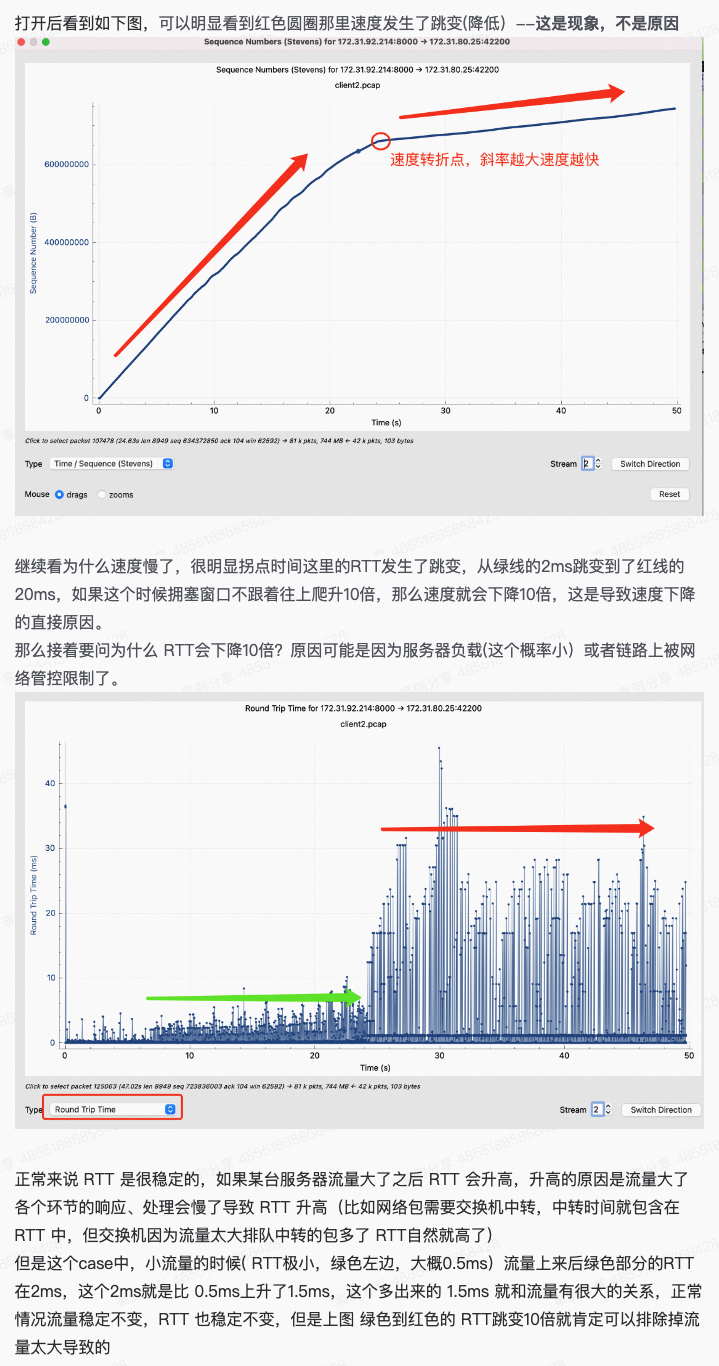

把上面的极限思维图形化就是下图中的灰色部分面积代表你的欠款(每个月的欠款累加),等额本息的方式欠得多,自然利息多:

3 为什么总有等额本金划得来的说法

同样贷款金额下等额本金总还款额少,也就是总利息少,所以给了很多人划得来的感觉,或者给人感觉利息便宜。其实这都是错的,解释如上第二个问题

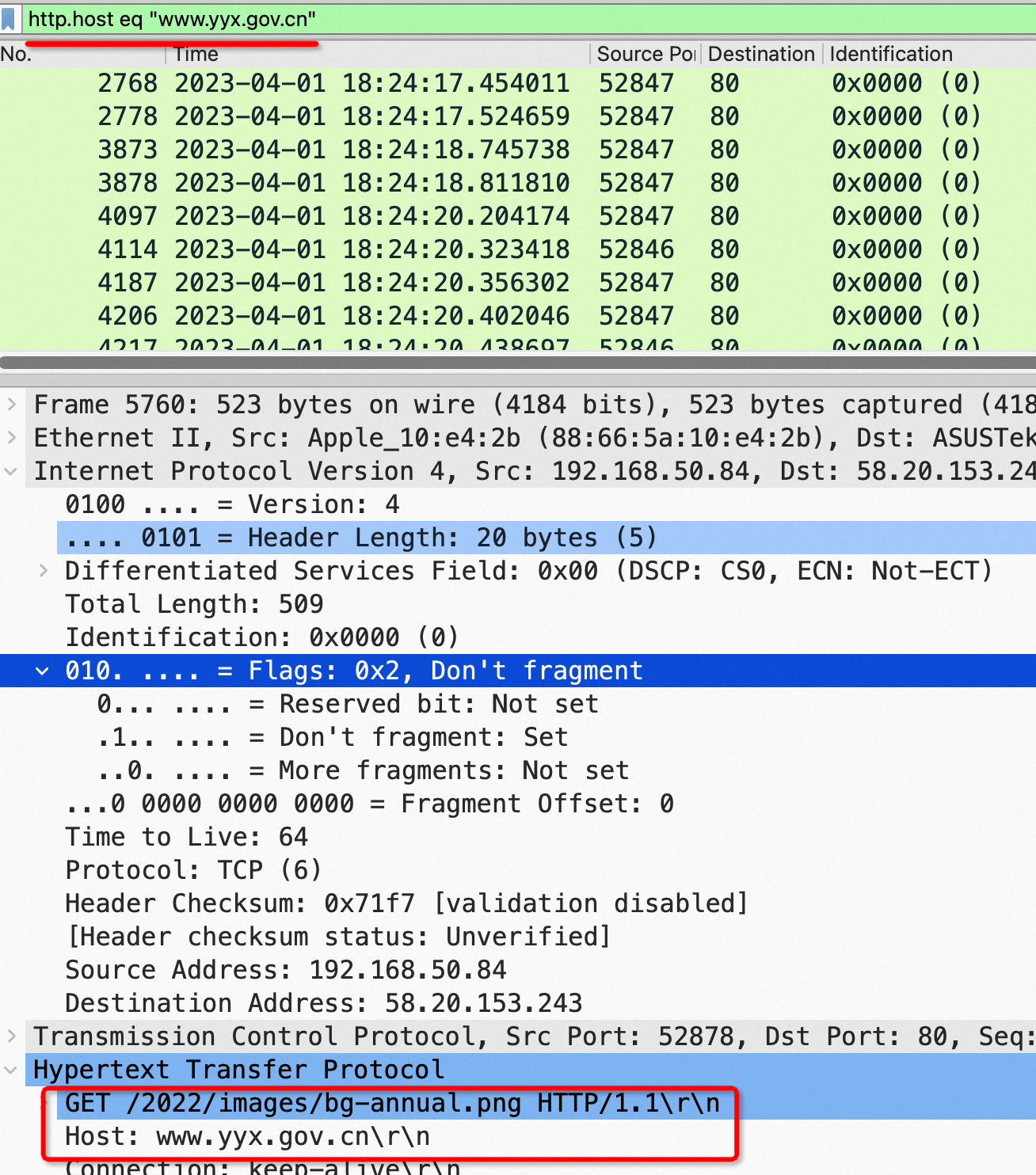

4 利息的计算方式

利息每个月计算一次,这个月所欠本金*月利率。所以利息只和你欠钱多少有关(我们假设所有人的利率都一样)。每个月的月供,都是先还掉这个月的利息,多余的再还本金

等额本金因为每个月还掉的本金多,所以计算下来每个月的利息更少

5 如何理解等额本金和等额本息

同样贷款额+利率的话等额本金开始还款一定比等额本息要多一些,那么你可以把等额本金分成两块,一块和等额本息一样,多出来的部分你把他看成这个月额外做了一次提前还款。你提前还款了后面的总利息自然也会更少一些,然后每个月的等额本息也会减少,以此类推每个月都是这样额外做一次提前还款直到最后一个月。

总结:等额本金=等额本息+提前还贷

额本金开始还款一定比等额本息要多一些,可以把等额本金分成两块,一块和等额本息一样,多出来的部分把他看成这个月额外做了一次提前还款。提前还款后总利息也会更少一些,然后每个月的等额本息也会减少,以此类推每个月都是这样额外做一次提前还款直到最后一个月。

6 提前还款划不划得来

钱在你手里没法赚到比利息更高的收益的话(99%的人属于这种)提前还贷划得来,之所以以前不建议大家提前还贷,是因为以前普遍涨薪快、通胀厉害、房价涨得块,把钱留出来继续买二套、三套更赚钱。另外钱留在手里会有主动权和应急方便

7 提前还贷会多付利息吗?

不会,见第四条利息的计算方式。担心提前还贷的时候这比贷款把后面10年的利息收走了的是脑子不好使的人。但有些银行提前还贷会有违约金

8 为什么等额本金和等额本息给了这么多人错觉

从知识的第一性出发,他们都没理解第4条,受社会普遍意识影响都预先留下了错误经验。本质就是利息只和贷款额、利率有关。

9 贷款30年和10年利率有差异吗?

没有

10贷款30年,还了6年了,然后一次还清所有欠款会多还利息吗?

不会,你前6年只是还了前6年的利息,没为之后的6年多付一分利息

11 那为什么利率不变我每个月利息越来越少

因为你欠的钱越来越少了,不是你提前还了利息,是你一直在还本金

12 网购分期合适吗?

大部分小贷公司的套路就是利用你每个月已经在还本金的差异,利息自然越来越少,然后计算一个大概5%的年息误导你,实际这种网购分期的实际利息要是他计算的2倍……好好想想

等额本金、本息都搞不清楚的人就不要去搞分期了,你的脑瓜子在这方面不好使。

聪明人只看第4条就能在大脑里得出所有结论,普通人除了要有第4条还需要去看每个月的还款额、还款额里面的本金、还款额里的利息等实践输入才能理解这个问题,这就是差异

https://zhuanlan.zhihu.com/p/161405128

提前还贷

要不要提前还贷

要

提前还贷划得来吗?

划不划得来要看这笔钱在你手里能否获得比房贷利息更高的收入以及你对流动资金的需要。其实万一有个啥事也可以走消费贷啥的,现在利息都很低

等额本息比等额本金提前还贷更划得来?

一样的,你这个问题等价于我欠100万,老婆欠50万,所以我提前还贷比朋友合算吗?显然你两谁提前还贷合算只和你两的贷款利率是否有差别

等额本息和等额本金利率一样的,所以没有差别

20年的房贷我已经还了15年了是不是不值得提前还贷了?

错误!利率还是那个利率,跟你还剩10年和还剩5年没关系,提前还贷都是等价的。记住有闲钱就提前还

问题的本质

搞清楚每个月的利息怎么计算出来的 利息=欠款额*月利率,月供都是把本月利息还掉多出来的还本金,也就是每个月欠款额会变少

总结

每个领域都有一些核心知识点代表着这些问题的本质,你只有把核心知识点或者说问题本质搞懂了才能化繁为简、不被忽悠!

那贷款这里的本质是什么?就是利息只和你每个月欠款以及利率有关!这简直是屁话,太简单了,但你不能理解他,就容易被套上等额本金、等额本息、提前还贷的外壳给忽悠了。

再或者说这里的本质就是:你去搞清楚每个月的还款是怎么计算的。月供=本月所欠X利率+本月还掉的本金 这是个核心公式,差别在每个月还掉的本金不一样!

就这样吧该懂的也该看懂了,看不懂的大概怎么样也看不懂!只能说是蠢,这些人肯定理科不好、逻辑不行,必定做不了程序员。

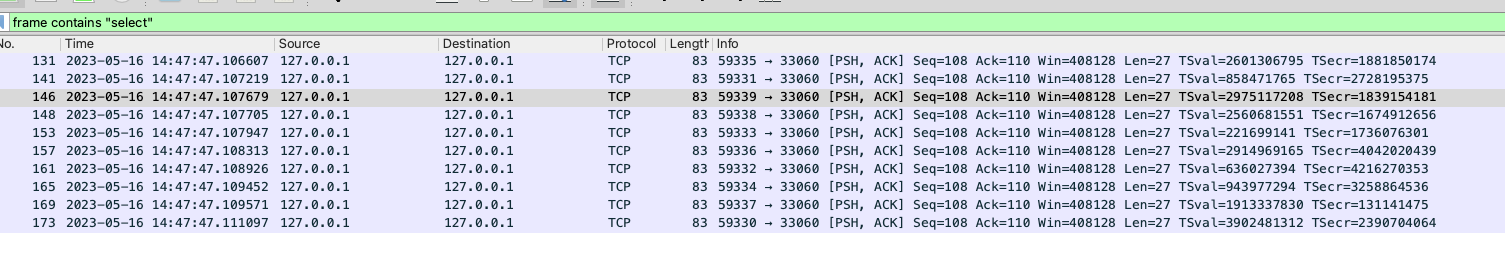

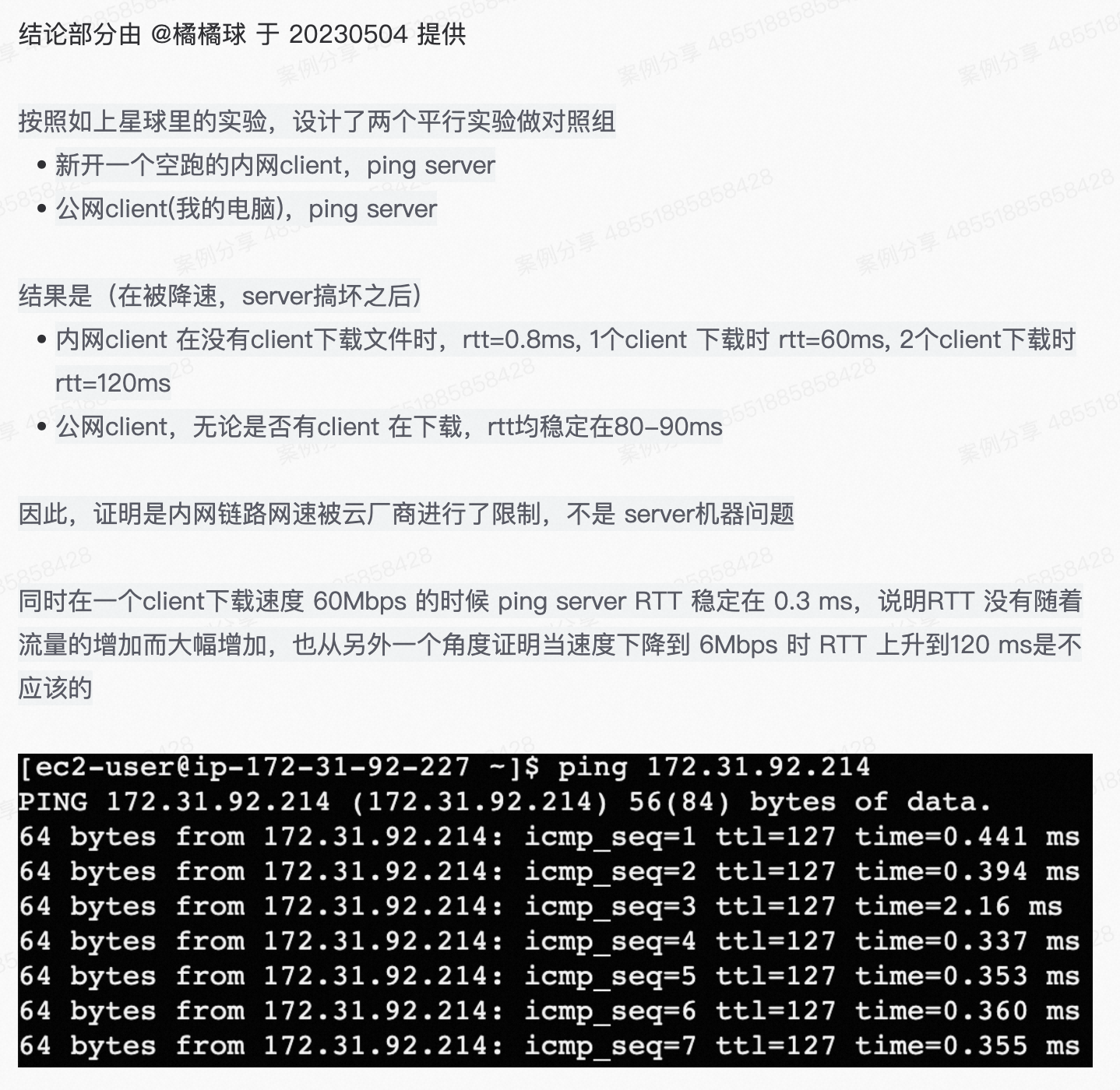

比如网上流传的如图总结的所有结论都是错的: